“Unit” testing of the ehratmworkflow package with clibatchtest.py

clibatchtest.py (Command Line Interface batch test) is a program that iterates through a list of specified wfnamelists, running each one and reporting its success or failure in an organised way. Its primary intent is to serve the role that unit tests would, iterating through a set of problems that are all expected to “pass.” This kind of utility can be particularly helpful when dealing with a new environment or after making modifications to the underlying code.

The presentation here proceeds incrementally, as follows:

Use of clibatchtest.py to run a single case (including debugging features)

Use of clibatchtest.py to run more than one case

Testing with a portable collection of wfnamelists

Running a simple case

This section introduces the basics of clibatchtest.py usage, beginning with a simple wfnamelist, and then introducing an error in an effort to illustrate how we might start troubleshooting the problem.

The program is found in the repository in

packages/ehratmworkflow/tests/clibatchtest.py

and, to work properly, it requires the environment set up by the accompanying file setup_test_env.sh.

To start: since you’ll be editing clibatchtest.py to specify the location of wfnamelists and to enable troubleshooting when necessary, and you may also need to edit setup_test_env.sh, it may be a good idea to work with copies of these files in a working directory outside of the repository. That’s what the following assumes.

In the following, we will:

use setup_test_env.sh to set up the necessary environment variables and a compatible Python environment

copy in some test files that we used in the User Perspective tests

edit clibatchtest.py to run a single case

introduce an error in the wfnamelist and demonstrate how we can begin to obtain more information for troubleshooting the problem

setup_test_env.sh

About eight environment variables need to be defined, pointing to the repository location, the locations of the WPS/WRF and FlexpartWRF distributions, and the location of met data, plus a couple of others. Among these, the CONDA_ENV variable assumes that you have a conda environment of the specified name available, one that is compatible with the EHRATM system. This was discussed in the User Perspective document. For the purposes of this demonstration, if you don’t want to set up such an environment, you can instead hard-code the Python interpreter (as suggested in the User Perspective document), in this case by creating a symlink named epython3 that points to my own working python3. This is not the normal way you would do things, but it lets you temporarily avoid the complexities of getting the right Python environment set up.

Setup of the simple test case

For this example, we copy into our working directory the following wfnamelist file, which was used in the User Perspective document and is found in the repository at packages/ehratmworkflow/docs/UserPerspective/sample_workflows/wfnamelist.gfsungrib:

&control

workflow_list = 'ungrib'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2021051415'

end_time = '2021051421'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

The user will want to change the workflow_rootdir value to one of their own writable directories.

Next, clibatchtest.py needs to be edited.

clibatchtest.py is set up under the assumption that it will be used from within the repository. This is probably more convenient for the experienced developer who is already somewhat familiar with this code, but for this initial exposure the following assumes that, in accordance with the guidance given above, we are using copies of clibatchtest.py and setup_test_env.sh in a working directory (in this example, /home/consult/morton/clibatchtestdir/).

The copy of clibatchtest.py in this directory should be modified to 1) use the epython3 symlink described above, and 2) use the current directory as the directory that contains the wfnamelists to be tested. In clibatchtest.py, this means:

Change PYTHON = 'python3' to PYTHON = './epython3'

Comment out the existing definition of WFNML_TESTDIR and set it to the current working directory, './'

clibatchtest.py relies on several (currently eight) environment variables being set correctly, and these are defined in setup_test_env.sh. Users will need to point these at known distributions of WRF and Flexpart WRF, and at a known good copy or branch of the high-res-atm repository. Additionally, the TEMP_ROOTDIR variable will need to be set to an existing scratch location that the user has write permission for.

Also, if you are using the epython3 symlink for Python, you should comment out the last statement in setup_test_env.sh, the one that sets up a conda environment.
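Because a missing or empty variable tends to surface only later as a confusing failure, it can help to check the environment first. The following is a minimal sketch, not part of clibatchtest.py: REQUIRED_VARS is a hypothetical, deliberately incomplete list holding only names this document mentions, and the helper names are illustrative. Extend the list with the remaining variables from your copy of setup_test_env.sh (and drop CONDA_ENV if you are using the epython3 symlink).

```python
import os

# Hypothetical and incomplete: only variable names mentioned in this document.
# Add the other variables defined in your copy of setup_test_env.sh.
REQUIRED_VARS = ["TEMP_ROOTDIR", "CONDA_ENV"]


def missing_env_vars(names, env=None):
    """Return the names that are unset or empty in env (default: os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def check_temp_rootdir(env=None):
    """True if TEMP_ROOTDIR names an existing directory we can write to."""
    env = os.environ if env is None else env
    path = env.get("TEMP_ROOTDIR", "")
    return os.path.isdir(path) and os.access(path, os.W_OK)
```

Run it in the same shell after sourcing setup_test_env.sh; an empty list from missing_env_vars(REQUIRED_VARS) and True from check_temp_rootdir() suggest the basics are in place.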

Finally, we need to define the list of wfnamelists to test. There is a single variable in clibatchtest.py, WFNML_TESTLIST, representing a Python list of these wfnamelists to be tested. The program assumes that all of the files are in the WFNML_TESTDIR defined above.

Although this section of clibatchtest.py is long and looks very complicated, most of it is commented-out material from which the knowledgeable user can construct a list of all possible tests, subsets of these tests, or single tests. The bottom line is that, at the end of this section, the uncommented assignment to WFNML_TESTLIST should be a Python list of the names of the wfnamelists to be tested. It’s really that simple.

In our case, we want to test a single, simple wfnamelist, wfnamelist.gfsungrib, that was already copied into the working directory, so in clibatchtest.py we simply set

WFNML_TESTLIST = ['wfnamelist.gfsungrib']
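Taken together, the edits near the top of the working copy of clibatchtest.py might look like the following. This is a sketch of the three settings discussed above, not a copy of the file; the original WFNML_TESTDIR value is deliberately not reproduced here.

```python
# Sketch of the edited settings near the top of the working copy of
# clibatchtest.py (values taken from this walkthrough).

# PYTHON = 'python3'     # original setting, commented out
PYTHON = './epython3'    # symlink to a known working python3

# The original WFNML_TESTDIR definition is commented out and replaced with
# the current working directory, where the wfnamelist was copied.
WFNML_TESTDIR = './'

# A single, simple test
WFNML_TESTLIST = ['wfnamelist.gfsungrib']
```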

To review, we have

Created copies of clibatchtest.py and setup_test_env.sh in a local working directory. This is done to keep the inexperienced user from accidentally corrupting the repository code. The experienced user will likely work right from the repository and not bother with this.

We have copied a simple wfnamelist into the working directory so that we can demonstrate a test on it

We created a link to “my” Python 3 on devlan, calling it epython3. The experienced user will generally forego this step and make sure they are using the correct conda environment.

We edited variables at the beginning of clibatchtest.py for this particular setup. The experienced user will find that if they just run all of this out of the repository, they won’t need to do this.

We edited setup_test_env.sh for the needed environment variables.

Once this is accomplished, we simply need to make sure that our environment is set, and run clibatchtest.py for the single test we defined.

In the above example, the Unix return code is displayed; in this case, a zero indicates that the test completed without any operating system error, i.e., the simulation ran. It’s important to note that this does not check the actual output values, only that the system ran the test without apparent problems.
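The distinction between “the simulation ran” and “the results are correct” comes down to how the exit status is used. Below is a minimal sketch, assuming only that each test runs as a separate process; run_case is a hypothetical helper, not clibatchtest.py’s actual code, and the stand-in commands simply use the current Python interpreter. A zero return code means the process finished without reporting an error; no output files are inspected.

```python
import subprocess
import sys


def run_case(cmd, cwd=None):
    """Run one test command with its output suppressed and return the exit code.

    A return code of 0 only says the process finished without reporting an
    error; it says nothing about whether the output values are correct.
    """
    completed = subprocess.run(
        cmd, cwd=cwd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
    )
    return completed.returncode


if __name__ == "__main__":
    # Stand-in commands that succeed and fail, using the current interpreter.
    ok = run_case([sys.executable, "-c", "pass"])
    bad = run_case([sys.executable, "-c", "import sys; sys.exit(1)"])
    print(ok, bad)  # prints: 0 1
```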

Note that, by default (this can be changed), the run directories for each test are retained, and just as in the User Perspective document, we can go in and experiment or troubleshoot cases.

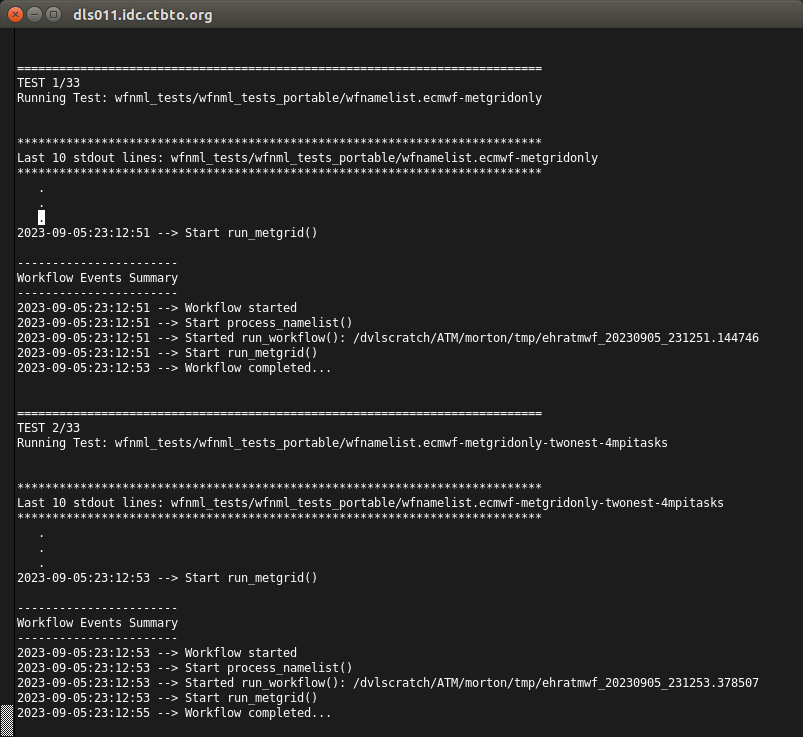

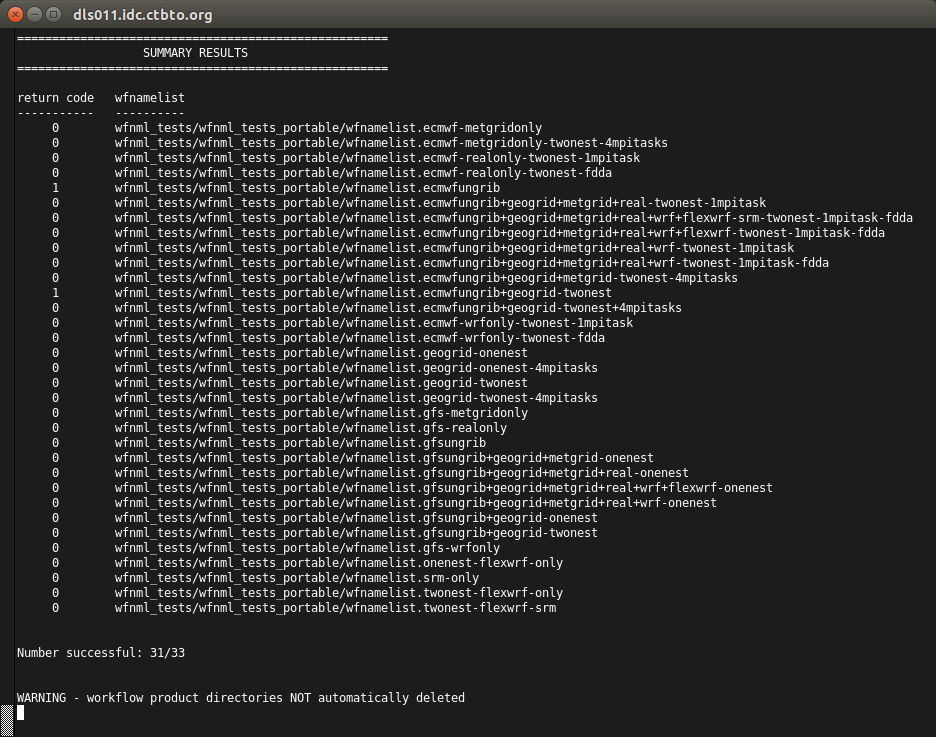

And that’s it. If we set up WFNML_TESTLIST for a number of tests, they are run iteratively, with a little information reported for each test, followed by a summary statement. The following screenshots show an example of a full set of tests.

These figures show that a large collection of tests has already been created, ranging from single-component to full simulation workflows, and that being able to run them all with a single command lets us quickly see whether our system is working correctly.

To keep the output of such a test run immediately informative, all output produced by the simulations is suppressed, so by default the user sees only a brief timeline for each test, plus a summary. However, when there is a failure it is useful to be able to see debugging output and stack traces, and the next section describes how.
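As an illustration of the “brief timeline plus summary” idea, here is a hypothetical helper that turns a mapping of wfnamelist names to return codes into one status line per test and a closing summary line. The report format is invented for this sketch and does not reproduce clibatchtest.py’s actual output.

```python
def format_summary(results):
    """Build a compact report from {wfnamelist name: return code}.

    One status line per test, then a single summary line (format is
    hypothetical, for illustration only).
    """
    lines = []
    for name, rc in results.items():
        status = "PASS" if rc == 0 else "FAIL"
        lines.append(f"{status}  rc={rc}  {name}")
    n_pass = sum(1 for rc in results.values() if rc == 0)
    lines.append(f"Summary: {n_pass}/{len(results)} tests passed")
    return "\n".join(lines)


if __name__ == "__main__":
    print(format_summary({"wfnamelist.gfsungrib": 0,
                          "wfnamelist.full-era-workflow": 1}))
```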

Accessing runtime logging information for a single test

In this section we demonstrate features of clibatchtest.py that allow us to gain additional information that might be helpful for troubleshooting. In this example, we introduce a minor error in wfnamelist.gfsungrib, one that I’ve encountered: we change subtype = '0.5.fv3' to subtype = '0.5'. Since there is no data for this subtype during the period of interest, the simulation is bound to fail.

We might start by looking in the listed run directory, /dvlscratch/ATM/morton/tmp/ehratmwf_20230925_011520.564059/, and find that it’s empty. This implies that something went wrong early, but what? We have nothing to go on. There is, however, a flag in clibatchtest.py that enables output of all simulation stdout and stderr:

###########################################################################

# This variable will normally be set to False, allowing clean iteration

# through all tests.

#

# If this is set to true, then the single test (if there is more than

# one listed, only the first one will be executed) will be run with

# stdout/stderr all going to screen, and after execution this program

# will terminate. Since this program by default tries to keep things

# clean, if something goes wrong it can be difficult to understand. So,

# this option allows for all the ugly details to be generated.

####################################

#### FOR DEBUGGING ########

SINGLE_RUN_DEBUGGING = False

####################################

###########################################################################

As the comments say, by setting SINGLE_RUN_DEBUGGING to True, all messages will go to the screen for the first simulation in the list of tests, and then clibatchtest.py will exit. So, if we run it with the option enabled, we get some information that might help the experienced user immediately find the problem (newer GFS files are stored in subdirectory 0.5.fv3, not 0.5).

If that’s not enough information, we can, as the message suggests, specify a more verbose log level by adding

log_level = 'debug'

to the &control section of the wfnamelist.

Because I have made extensive (some might say obsessive) use of DEBUG logger statements in all of the codes, this will firehose much more information than you would ever want, but with patience it hopefully helps to zero in on the problem area.
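The DEBUG firehose follows from how Python’s standard logging module filters messages: logger.debug() calls are dropped unless the logger’s level is DEBUG. The sketch below assumes that the log_level string from &control is mapped onto a logging level by name; level_from_namelist, make_logger and the logger name are hypothetical, and the workflow’s real mapping may differ.

```python
import logging


def level_from_namelist(log_level):
    """Map a wfnamelist log_level string (e.g. 'debug') to a logging level."""
    level = getattr(logging, str(log_level).upper(), None)
    if not isinstance(level, int):
        raise ValueError(f"unrecognised log_level: {log_level!r}")
    return level


def make_logger(log_level):
    """Return a logger whose threshold follows the requested log_level."""
    logger = logging.getLogger("ehratmwf_sketch")  # hypothetical logger name
    logger.setLevel(level_from_namelist(log_level))
    return logger


if __name__ == "__main__":
    logging.basicConfig(format="%(levelname)s: %(message)s")
    log = make_logger("debug")
    log.debug("shown only when log_level = 'debug'")
    log.info("shown at 'info' and 'debug'")
```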

In this way, we can run through a small or large collection of tests and then, if any fail, with little effort we can gain a great deal more information.
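The behaviour described in the SINGLE_RUN_DEBUGGING comment block (run only the first listed test, send its stdout/stderr to the screen, then stop) can be sketched as below. run_tests is a hypothetical helper written under the assumption that each test is a separate process; it is not the code in clibatchtest.py.

```python
import subprocess
import sys


def run_tests(cmds, single_run_debugging=False):
    """Return the exit codes of the test commands in cmds.

    Normal mode: every command runs with stdout/stderr discarded.
    Debugging mode: only the first command runs, with its output going
    straight to the terminal, and nothing else is executed.
    """
    if single_run_debugging:
        return [subprocess.run(cmds[0]).returncode]
    return [
        subprocess.run(cmd, stdout=subprocess.DEVNULL,
                       stderr=subprocess.DEVNULL).returncode
        for cmd in cmds
    ]


if __name__ == "__main__":
    cmds = [
        [sys.executable, "-c", "print('first test: all output visible')"],
        [sys.executable, "-c", "print('second test: never run')"],
    ]
    print(run_tests(cmds, single_run_debugging=True))
```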

Running two cases

In this example, we run two tests in clibatchtest.py - a single-component geogrid test and a full ECMWF workflow (with fdda). Nothing really new is done in this example - it’s just meant to show additional variety, and how we can process more than one test.

The necessary files are found in the repository directory UserPerspective/sample_workflows/, used in the User Perspective documentation. They are:

- wfnamelist.geogrid-twonest-4mpitasks
  - Edit workflow_rootdir for your own filesystem
- wfnamelist.full-era-workflow
  - Edit workflow_rootdir in &control
  - You may need to edit rootdir in &grib_input1 and &grib_input2 if the ERA met files have been moved
- small_domain_twonest.nml
- namelist.input.era-twonest-fdda

In addition, edit anne_bypass_namelist_input in &real and &wrf for the correct FULL path to namelist.input.era-twonest-fdda, and edit bypass_user_input in &flexwrf for the correct FULL path to flxp_input.txt.twonest-from-era.
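For this example, the test list and a couple of sanity checks might look like the following sketch. WFNML_TESTDIR = './' assumes the working-directory setup used earlier, and the two helper functions are hypothetical conveniences: one reports wfnamelists that are not where the list says they are, the other flags bypass paths that are not FULL (absolute) paths.

```python
import os

# The two tests for this example, assumed to be copied into the working directory.
WFNML_TESTDIR = './'
WFNML_TESTLIST = [
    'wfnamelist.geogrid-twonest-4mpitasks',
    'wfnamelist.full-era-workflow',
]


def missing_wfnamelists(testdir, testlist):
    """Return the wfnamelists in testlist that are not present in testdir."""
    return [name for name in testlist
            if not os.path.isfile(os.path.join(testdir, name))]


def relative_paths(paths):
    """Return entries that are not absolute; bypass paths must be FULL paths."""
    return [p for p in paths if not os.path.isabs(p)]
```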

Portable Tests for Reproducibility

The wfnamelist approach uses the concept of Fortran namelists, with processing well-supported (though sometimes tricky) by the Python f90nml package. This is a powerful system for processing namelists, but an important shortcoming in the context of setting up automated tests for a variety of users is that there is no native support for using variables within a namelist to represent parameters like paths. For example, many of the previous examples contain entries like the following:

&control

workflow_list = 'ungrib'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2021051415'

end_time = '2021051421'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

where the workflow_rootdir in the &control group and the rootdir in the &grib_input1 group have very specific paths. With more than thirty wfnamelists currently set up for automated batch testing, modifying them all for different workflow_rootdir or rootdir paths would be a great deal of error-prone work, and this is especially bothersome when working on a system with a different file structure. Because the f90nml package, to the best of my knowledge, does not provide any kind of support for variables or conditional values, I decided to implement an optional string-pattern-replacement preprocessing step for wfnamelists: when a specially coded string is found in a wfnamelist, it is replaced with the value of an environment variable.

For example, the above wfnamelist is written as

&control

workflow_list = 'ungrib'

workflow_rootdir = '#!!#WFN_TEMP_ROOTDIR#!!#/myworkflowdir'

/

&time

start_time = '2021051415'

end_time = '2021051421'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '#!!#WFN_METDATA_NCEP#!!#'

/

Upon encountering each wfnamelist, clibatchtest.py reads it line by line and copies it to a temporary wfnamelist, which is what is actually used for the test (transparently to the user). If it encounters a string delimited by #!!# … #!!#, it looks for an environment variable whose name matches the string and, if one is found, replaces the delimited string with the value of that environment variable. In this way, custom values can be entered into the wfnamelists without having to edit them, and the same set of test wfnamelists can be used across different user accounts and computing systems.

The preceding might be run like

The wfnamelist would be processed into the following (the general user would not be aware of this)

&control

workflow_list = 'ungrib'

workflow_rootdir = '/dvlscratch/ATM/tipka/scratch/myworkflowdir'

/

&time

start_time = '2021051415'

end_time = '2021051421'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

and then processed with the custom directories correctly encoded.

The use of #!!# … #!!# as delimiter was chosen after some consideration, mostly wanting to make sure that it couldn’t possibly conflict with other string sequences. I know it’s a bit of overkill, and Anne has already commented on this, but I’m afraid it is what it is for now - all of the “portable” wfnamelist test cases have been encoded with this delimiter.

The preprocessing has been implemented with the intent that changing the delimiter to something else would simply be a matter of changing the statement that defines it at the top of src/ehratmworkflow/preprocnml.py (note that I haven’t tested this feature).
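The substitution described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the code in src/ehratmworkflow/preprocnml.py: placeholder names are assumed to consist of letters, digits and underscores, a placeholder with no matching environment variable is assumed to be left untouched, and the function names are hypothetical. The delimiter is a single module-level constant passed through re.escape, so swapping it for another string needs no other change.

```python
import os
import re

DELIMITER = "#!!#"


def make_pattern(delimiter=DELIMITER):
    """Regex matching DELIM NAME DELIM, capturing NAME (letters, digits, _)."""
    d = re.escape(delimiter)
    return re.compile(d + r"(\w+)" + d)


def preprocess_line(line, env=None, delimiter=DELIMITER):
    """Replace each #!!#NAME#!!# with the value of environment variable NAME.

    Placeholders with no matching variable are left untouched (an assumption;
    the real preprocessing may handle that case differently).
    """
    env = os.environ if env is None else env

    def substitute(match):
        # A function replacement is inserted literally (no backslash escapes).
        return env.get(match.group(1), match.group(0))

    return make_pattern(delimiter).sub(substitute, line)


def preprocess_file(src_path, dst_path, env=None):
    """Copy a wfnamelist line by line into a temporary copy with substitutions."""
    with open(src_path) as src, open(dst_path, "w") as dst:
        for line in src:
            dst.write(preprocess_line(line, env))
```

For example, with WFN_TEMP_ROOTDIR set to /dvlscratch/ATM/tipka/scratch, the line workflow_rootdir = '#!!#WFN_TEMP_ROOTDIR#!!#/myworkflowdir' becomes workflow_rootdir = '/dvlscratch/ATM/tipka/scratch/myworkflowdir', matching the processed wfnamelist shown earlier.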

Running the portable tests

Running the set of portable tests (the ones that should run successfully on properly configured systems, with one intermittent condition discussed below) involves the following. Note the documentation in clibatchtest.py stating that the default setup assumes it is run from within the repository directory packages/ehratmworkflow/tests/. Given that this is essentially a development tool, that is a logical place to run it from. If you are going to run it elsewhere, you will need to modify some of the following appropriately:

Edit WFNML_TESTDIR in clibatchtest.py to specify the path to the portable tests. The default is already coded in, expecting these to be in the repository in packages/ehratmworkflow/tests/wfnml_tests_portable/.

Unless debugging, ensure that SINGLE_RUN_DEBUGGING is set to its default value of False.

Set up the list WFNML_TESTLIST with the names of the wfnamelists you wish to test. Note that the variable ALL_TESTS in the program contains the list of all wfnamelists, and simply setting WFNML_TESTLIST = ALL_TESTS will test them all. Otherwise, edit it for the desired set of tests.

A number of correctly-set environment variables are expected by clibatchtest.py. These are set in packages/ehratmworkflow/tests/setup_test_env.sh, and most are based on system-specific paths. This file was addressed in previous examples. Note at the end of the file are three WorkFlowNamelist (WFN_) variables that will be used to replace the string patterns discussed above.

Although the above may seem complicated, clibatchtest.py “should” be ready to go simply by editing a few root paths in setup_test_env.sh, setting WFNML_TESTLIST = ALL_TESTS, and running it from packages/ehratmworkflow/tests/.
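Since every placeholder in the portable wfnamelists needs a matching WFN_ variable, a quick scan before a long batch run can catch an unset one early. The sketch below is hypothetical (it is not part of clibatchtest.py) and assumes only the #!!#NAME#!!# convention described earlier.

```python
import os
import re

# Placeholder convention from the preprocessing section: #!!#NAME#!!#
PLACEHOLDER = re.compile(r"#!!#(\w+)#!!#")


def undefined_placeholders(text, env=None):
    """Names used as #!!#NAME#!!# in text that have no environment variable."""
    env = os.environ if env is None else env
    return sorted({name for name in PLACEHOLDER.findall(text) if name not in env})


def scan_testdir(testdir, testlist, env=None):
    """Map each wfnamelist in testlist to its unresolved placeholder names."""
    report = {}
    for name in testlist:
        with open(os.path.join(testdir, name)) as f:
            missing = undefined_placeholders(f.read(), env)
        if missing:
            report[name] = missing
    return report
```

Called with the same directory and list used for WFNML_TESTDIR and WFNML_TESTLIST, an empty dict suggests that every placeholder will resolve.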

On devlan, buffering may make it appear that nothing is happening for a while, but running top in a separate window will allow you to see the various WPS/WRF and FlexpartWRF executables running.

In this case we see that two tests failed (return code of 1). Unfortunately, this is the result of an intermittent memory problem on devlan associated with one of the WPS utilities. You will note that the two failures have the ecmwfungrib component in them, and that’s where they fail. If you were to run this test again, it’s quite likely that these two tests would pass, but another test or two with the ecmwfungrib component would fail. This problem has been traced to the WPS util/calc_ecmwf_p.exe executable, and similar problems have been mentioned in various bug reports. So, this is simply something that a user needs to be aware of for now.

Review of troubleshooting

This section is written like a sidebar, just to remind the technical user how they might try to troubleshoot problems like this.

Just as a review of troubleshooting, one could look through batchtest.log and see that this test proceeded as follows:

===========================================================================

TEST 11/33

Running Test: /dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/wfnml_tests/wfnml_tests_portable/wfnamelist.ecmwfungrib+geogrid+metgrid-twonest-4mpitasks

***************************************************************************

Last 10 stdout lines: /dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/wfnml_tests/wfnml_tests_portable/wfnamelist.ecmwfungrib+geogrid+metgrid-twonest-4mpitasks

***************************************************************************

.

.

.

2023-10-05:23:08:47 --> Workflow started

2023-10-05:23:08:47 --> Start process_namelist()

2023-10-05:23:08:47 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231005_230847.514046

2023-10-05:23:08:47 --> Start run_ungrib()

2023-10-05:23:08:49 --> Start run_ecmwfplevels()
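Picking the last timeline entry out of such a log excerpt is easy to script. The sketch below assumes only the “timestamp --> message” layout visible above; last_workflow_step is a hypothetical helper, not something provided by the package.

```python
def last_workflow_step(log_lines):
    """Return the text after '-->' on the last timeline line, or None."""
    steps = [line.split("-->", 1)[1].strip()
             for line in log_lines if "-->" in line]
    return steps[-1] if steps else None


if __name__ == "__main__":
    excerpt = [
        "2023-10-05:23:08:47 --> Start run_ungrib()",
        "2023-10-05:23:08:49 --> Start run_ecmwfplevels()",
    ]
    print(last_workflow_step(excerpt))
```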

The workflow for this test never made it past the run_ecmwfplevels() operation. One could go further into the provided run directory (/dvlscratch/ATM/morton/tmp/ehratmwf_20231005_230847.514046 in this case) and notice that in the ecmwfplevels_rundir/WPS/ subdir, the expected PRES* output files were not produced. From within that directory, we could run util/calc_ecmwf_p.exe and, in this case, it would likely run correctly, producing the expected output files. We might even be able to run it another time or two successfully, but eventually we would hit the intermittent error:

.

.

.

136 0.000000 0.9976300001

137 0.000000 1.0000000000

Reading from ECMWF_SFC at time 2014-01-24_00

Found PSFC field in ECMWF_SFC:2014-01-24_00

Operating system error: Cannot allocate memory

Allocation would exceed memory limit

And, if we ran it again, it would probably work again a couple more times.
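The two manual checks above (looking for PRES* files and rerunning util/calc_ecmwf_p.exe several times) can also be scripted. Both functions below are hypothetical sketches: pres_outputs assumes the ecmwfplevels_rundir/WPS/ layout described above, and repeat_run assumes the executable exits with a non-zero status when it hits the allocation error, which is typical of a Fortran runtime error but has not been confirmed here.

```python
import glob
import os
import subprocess
import sys


def pres_outputs(rundir):
    """List PRES* files in <rundir>/ecmwfplevels_rundir/WPS/ (empty if none)."""
    wps_dir = os.path.join(rundir, "ecmwfplevels_rundir", "WPS")
    return sorted(glob.glob(os.path.join(wps_dir, "PRES*")))


def repeat_run(cmd, attempts, cwd=None):
    """Run cmd `attempts` times in cwd and return the list of exit codes.

    Repeating the run helps expose an intermittent failure that a single
    successful run would hide.
    """
    codes = []
    for _ in range(attempts):
        result = subprocess.run(
            cmd, cwd=cwd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL
        )
        codes.append(result.returncode)
    return codes


if __name__ == "__main__":
    # Stand-in command; in practice this would be util/calc_ecmwf_p.exe,
    # run with cwd set to <rundir>/ecmwfplevels_rundir/WPS.
    print(repeat_run([sys.executable, "-c", "pass"], attempts=3))
```

In practice one might call repeat_run(["util/calc_ecmwf_p.exe"], 10, cwd=...) with cwd pointing at the run directory’s ecmwfplevels_rundir/WPS/ subdirectory, and look for any non-zero entries.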