Workflow Examples for ERA Initialization

Introduction

EHRATM supports the use of custom ERA met files to initialize WRF simulations. As with GFS inputs, the WPS components ultimately create a set of met_em* files, which are then used to drive the WRF components. However, ERA met files are not part of the default WRF Preprocessing System (WPS) processing and must be handled differently. The Vtables for conversion from GRIB2 format to WPS intermediate format are different. The ERA data arrives as separate model level and surface files, from which a WPS utility must derive 3-D pressure and geopotential height fields on the model levels (the "pressure level" files referred to below). Metgrid then combines all of this data to produce the standard set of met_em* files. From that point on, WRF processing is the same for GFS and ERA initialized data.

With minimal explanation, these examples illustrate how wfnamelists are used to perform each step up to and including metgrid, first as standalone components and then as a single workflow.

These examples follow on from the previous GFS examples, so it is assumed that the environment variables and test directory (with the symbolic link epython3) have been set up in the same way. As a quick review, the environment variable setting is:

export PYTHONPATH=<path-to-high-res-atm>/packages/nwpservice/src:<path-to-high-res-atm>/packages/ehratm/src:<path-to-high-res-atm>/packages/ehratmworkflow/src

As a reminder, copies of all of the following wfnamelists (and other important files) are available in the repository directory packages/ehratmworkflow/docs/UserPerspective/sample_workflows/

$ tree sample_workflows
sample_workflows
├── flexp_input.txt
├── namelist.input.gfs_twonest
├── small_domain_twonest.nml
├── wfnamelist.donothing
├── wfnamelist.ecmwf-fullwps-twonest-4mpitasks
├── wfnamelist.ecmwf-metgridonly-twonest-4mpitasks
├── wfnamelist.ecmwfungrib
├── wfnamelist.flexwrf+srm
├── wfnamelist.fullworkflow
├── wfnamelist.fullwps-twonest
├── wfnamelist.geogrid-twonest-4mpitasks
├── wfnamelist.gfs-metgridonly
├── wfnamelist.gfs-realonly
├── wfnamelist.gfsungrib
└── wfnamelist.gfs-wrfonly

0 directories, 15 files

Finally, a copy of a recent Workflow Namelist Reference document is available for understanding the format and options within a wfnamelist.

ERA ungrib

Unlike global GFS and ECMWF met data, ERA data is not stored in a standard place on CTBTO machines (in part because these datasets often cover varying, custom regional domains for specific purposes). EHRATM therefore adopts the convention that the ERA data it looks for is stored in the same kind of directory hierarchy as the global data, namely

<path-to-root-dir>/YYYY/MM/DD/

and the files are named with an EA prefix, followed by a YYYYMMDDHH date/time string, followed by either a .ml (for 3D model levels) or .sfc (for 2D surface) extension. For example, the root data directory used in the following case, /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA/, looks as follows:

$ tree /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA
/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA
└── 2014
    └── 01
        └── 24
            ├── EA2014012400.ml
            ├── EA2014012400.sfc
            ├── EA2014012403.ml
            ├── EA2014012403.sfc
            ├── EA2014012406.ml
            └── EA2014012406.sfc

3 directories, 6 files
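Because EHRATM looks for the ERA files through this naming convention, it can help to confirm that every expected file is present before running ungrib. The following is a minimal Python sketch and is not part of EHRATM. The function names era_input_paths and missing_inputs are hypothetical. It assumes start and end times in the same YYYYMMDDHH form used in the wfnamelist &time group, with one .ml and one .sfc file per hours_intvl step.

```python
from datetime import datetime, timedelta
from pathlib import Path


def era_input_paths(rootdir, start, end, hours_intvl, ext):
    """Expected ERA file paths from start to end, inclusive.

    Follows <rootdir>/YYYY/MM/DD/EAYYYYMMDDHH.<ext>, with ext 'ml' or 'sfc';
    start and end are 'YYYYMMDDHH' strings, as in the &time group.
    """
    t = datetime.strptime(start, "%Y%m%d%H")
    t_end = datetime.strptime(end, "%Y%m%d%H")
    paths = []
    while t <= t_end:
        day_dir = Path(rootdir) / t.strftime("%Y/%m/%d")
        paths.append(day_dir / f"EA{t:%Y%m%d%H}.{ext}")
        t += timedelta(hours=hours_intvl)
    return paths


def missing_inputs(rootdir, start, end, hours_intvl):
    """Expected .ml and .sfc files that are not present as regular files."""
    return [p for ext in ("ml", "sfc")
            for p in era_input_paths(rootdir, start, end, hours_intvl, ext)
            if not p.is_file()]
```

For the test data above, missing_inputs("/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA", "2014012400", "2014012406", 3) would be expected to return an empty list.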

The workflow namelist in this case consists of two ungrib entries, one for the model level data and one for the surface data (both may be located in the same directory). ehratmwf.py recognizes that, for this type of data, the ungribbed output must then be processed by a WPS utility to create the pressure level ungribbed files.

It’s important to note that the ungrib components in this case use custom Vtable files that aren’t part of the default WRF distribution. EHRATM is set up with default locations for these files, but users can specify their own within the wfnamelist. In this example, for simplicity, we use the EHRATM default locations, so the Vtables don’t need to appear in the wfnamelist:

wfnamelist.ecmwfungrib

&control
   workflow_list = 'ungrib'
/

&time
   start_time = '2014012400'
   end_time = '2014012406'
   wrf_spinup_hours = 0
/

&grib_input1
   type = 'ecmwf_ml'
   hours_intvl = 3
   rootdir = '/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA'
/

&grib_input2
   type = 'ecmwf_sfc'
   hours_intvl = 3
   rootdir = '/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA'
/

$ ./epython3 ehratmwf.py -n wfnamelist.ecmwfungrib
2023-09-19:19:03:08 --> Workflow started
2023-09-19:19:03:08 --> Start process_namelist()
2023-09-19:19:03:08 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201
2023-09-19:19:03:08 --> Start run_ungrib()
2023-09-19:19:03:11 --> Start run_ecmwfplevels()
-----------------------
Workflow Events Summary
-----------------------
2023-09-19:19:03:08 --> Workflow started
2023-09-19:19:03:08 --> Start process_namelist()
2023-09-19:19:03:08 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201
2023-09-19:19:03:08 --> Start run_ungrib()
2023-09-19:19:03:11 --> Start run_ecmwfplevels()
2023-09-19:19:03:13 --> Workflow completed...
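Each run reports its workflow run directory on the "Started run_workflow()" line, and later standalone steps need that path. The following sketch is not part of EHRATM and its helper names are hypothetical. It extracts the path from captured output. The regular expression assumes the log format shown above and a run directory path without spaces. Merging stderr into stdout is only a precaution, because it is not stated which stream ehratmwf.py writes these events to.

```python
import re
import subprocess

# Matches the line printed when a workflow starts, e.g.
# "2023-09-19:19:03:08 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201"
RUN_DIR_PATTERN = re.compile(r"-->\s*Started run_workflow\(\):\s*(\S+)")


def find_run_dir(log_text):
    """Return the first run directory reported in the output, or None."""
    match = RUN_DIR_PATTERN.search(log_text)
    return match.group(1) if match else None


def workflow_completed(log_text):
    """True if the output contains the 'Workflow completed' event."""
    return "--> Workflow completed" in log_text


def run_ehratmwf(wfnamelist):
    """Run ehratmwf.py and return (run_dir, completed).

    Assumes ./epython3 and ehratmwf.py are in the current directory,
    as in these examples.
    """
    proc = subprocess.run(
        ["./epython3", "ehratmwf.py", "-n", wfnamelist],
        stdout=subprocess.PIPE, stderr=subprocess.STDOUT, text=True,
    )
    return find_run_dir(proc.stdout), workflow_completed(proc.stdout)
```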

The structure of the output directory is a little busier than in the GFS examples.

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201
ecmwfplevels_namelist.wps namelist.wps_ecmwf_sfc ungrib_rundir_ecmwf_ml/
ecmwfplevels_rundir/ tmpmetdata-ecmwf_ml/ ungrib_rundir_ecmwf_sfc/
namelist.wps_ecmwf_ml tmpmetdata-ecmwf_sfc/

The ungribbed files are found in separate run directories for the model level inputs and the surface level inputs. As in the GFS examples, these run directories are complete, and the interested user can go into them to experiment or troubleshoot, if desired.

In both directory listings below, the ungribbed output files are the first three entries, the ones with timestamps in their names.

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_ml/WPS
ECMWF_ML:2014-01-24_00 GRIBFILE.AAB@ namelist.wps.all_options ungrib.exe@
ECMWF_ML:2014-01-24_03 GRIBFILE.AAC@ namelist.wps.fire* ungrib.log
ECMWF_ML:2014-01-24_06 link_grib.csh* namelist.wps.global util/
geogrid/ metgrid/ namelist.wps.nmm Vtable@
geogrid.exe@ metgrid.exe@ README
GRIBFILE.AAA@ namelist.wps ungrib/

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_sfc/WPS
ECMWF_SFC:2014-01-24_00 GRIBFILE.AAB@ namelist.wps.all_options ungrib.exe@
ECMWF_SFC:2014-01-24_03 GRIBFILE.AAC@ namelist.wps.fire* ungrib.log
ECMWF_SFC:2014-01-24_06 link_grib.csh* namelist.wps.global util/
geogrid/ metgrid/ namelist.wps.nmm Vtable@
geogrid.exe@ metgrid.exe@ README
GRIBFILE.AAA@ namelist.wps ungrib/

Finally, running this wfnamelist also produced the pressure level ungribbed files in their own run directory; these are the timestamped files with the PRES prefix. When we run metgrid, we will need to specify the locations of all of these ungribbed files.

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ecmwfplevels_rundir/WPS
ecmwf_coeffs@ geogrid/ namelist.wps.all_options README
ECMWF_ML:2014-01-24_00@ geogrid.exe@ namelist.wps.fire* ungrib/
ECMWF_ML:2014-01-24_03@ link_grib.csh* namelist.wps.global ungrib.exe@
ECMWF_ML:2014-01-24_06@ logfile.log namelist.wps.nmm util/
ECMWF_SFC:2014-01-24_00@ metgrid/ PRES:2014-01-24_00
ECMWF_SFC:2014-01-24_03@ metgrid.exe@ PRES:2014-01-24_03
ECMWF_SFC:2014-01-24_06@ namelist.wps PRES:2014-01-24_06

Note that the executable that produces the pressure level files is util/calc_ecmwf_p.exe, relative to the directory above. As with the other run directories, it can be rerun on its own for experimentation and troubleshooting, because the input data is already set up.
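The ungribbed files follow the standard WPS intermediate naming, PREFIX:YYYY-MM-DD_HH. Before moving on to metgrid, a short Python sketch like the following can confirm that all three prefixes are present for every time step. The helpers are hypothetical and not part of EHRATM. The sketch assumes you check the ecmwfplevels_rundir/WPS directory, which the listing shows holds links to the ECMWF_ML and ECMWF_SFC files alongside the PRES files.

```python
from datetime import datetime, timedelta
from pathlib import Path


def intermediate_names(prefix, start, end, hours_intvl):
    """Expected WPS intermediate file names, e.g. 'PRES:2014-01-24_00'."""
    t = datetime.strptime(start, "%Y%m%d%H")
    t_end = datetime.strptime(end, "%Y%m%d%H")
    names = []
    while t <= t_end:
        names.append(f"{prefix}:{t:%Y-%m-%d_%H}")
        t += timedelta(hours=hours_intvl)
    return names


def missing_intermediates(wps_dir, prefixes, start, end, hours_intvl):
    """Expected intermediate files that are absent from wps_dir.

    Path.exists() follows symbolic links, so a dangling link counts as missing.
    """
    wps = Path(wps_dir)
    return [name for prefix in prefixes
            for name in intermediate_names(prefix, start, end, hours_intvl)
            if not (wps / name).exists()]
```

For the run above, missing_intermediates("/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ecmwfplevels_rundir/WPS", ["ECMWF_ML", "ECMWF_SFC", "PRES"], "2014012400", "2014012406", 3) would be expected to return an empty list.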

Simple two-nest geogrid

This is exactly the same as the two-nest geogrid illustrated for GFS, but it’s repeated here for completeness. The geogrid products are independent of the type of met data input being used. In fact, if you’ve saved your geogrid output from the GFS examples, you don’t need to run the following at all; you can simply use its path when running metgrid in the next step. This illustrates the asynchronous capabilities of the EHRATM workflow component system: you don’t have to run full workflows when it doesn’t make sense.

For this one, we’ll use wfnamelist.geogrid-twonest-4mpitasks (the same one used in the GFS example), which refers to the domain definition namelist small_domain_twonest.nml (I copied both to my local directory for the following test). Most of the file and directory entries in the wfnamelist accept full pathnames; relative paths are interpreted relative to the current working directory.

The domain(s) created by geogrid are defined in the domain definition namelist, small_domain_twonest.nml

&domain_defn
   parent_id = 1, 1,
   parent_grid_ratio = 1, 3,
   i_parent_start = 1, 20,
   j_parent_start = 1, 20,
   e_we = 51, 19,
   e_sn = 42, 16,
   geog_data_res = '10m', '5m'
   dx = 30000,
   dy = 30000,
   map_proj = 'lambert',
   ref_lat = 50.00,
   ref_lon = 5.00,
   truelat1 = 60.0,
   truelat2 = 30.0,
   stand_lon = 5.0,
/
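The nest geometry in this domain definition can be checked with a little arithmetic. In WRF, a nest's grid spacing is its parent's dx divided by parent_grid_ratio, and each nest's (e_we - 1) and (e_sn - 1) must be whole multiples of parent_grid_ratio. For d02 this gives 30000 / 3 = 10000 m, with (19 - 1) / 3 = 6 and (16 - 1) / 3 = 5 parent cells. The nest therefore covers 180 km by 150 km and ends at parent indices 20 + 6 = 26 and 20 + 5 = 25, inside the 51 x 42 parent, which spans 1500 km by 1230 km. The Python sketch below automates these checks. Domain and check_domains are hypothetical helpers. The containment test is deliberately coarse: it only checks that the nest does not run past the parent's last index and does not enforce any recommended margin from the parent boundary.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    """One column of the &domain_defn group (parent_id is 1-based, as in WRF)."""
    parent_id: int
    parent_grid_ratio: int
    i_parent_start: int
    j_parent_start: int
    e_we: int
    e_sn: int


def check_domains(domains, dx):
    """Return ([(dx_m, width_km, height_km), ...], [problem, ...]).

    Assumes domains are listed in order, so each parent precedes its nests.
    """
    spacing, summary, problems = {}, [], []
    for n, d in enumerate(domains, start=1):
        if n == 1:
            spacing[n] = float(dx)
        else:
            parent = domains[d.parent_id - 1]
            spacing[n] = spacing[d.parent_id] / d.parent_grid_ratio
            # Nest dimensions must have the form parent_grid_ratio * k + 1.
            for name, size in (("e_we", d.e_we), ("e_sn", d.e_sn)):
                if (size - 1) % d.parent_grid_ratio != 0:
                    problems.append(f"d{n:02d}: ({name} - 1) is not a multiple of parent_grid_ratio")
            # Coarse containment: last parent index covered by the nest.
            i_end = d.i_parent_start + (d.e_we - 1) // d.parent_grid_ratio
            j_end = d.j_parent_start + (d.e_sn - 1) // d.parent_grid_ratio
            if i_end > parent.e_we or j_end > parent.e_sn:
                problems.append(f"d{n:02d}: nest extends beyond its parent")
        width_km = (d.e_we - 1) * spacing[n] / 1000
        height_km = (d.e_sn - 1) * spacing[n] / 1000
        summary.append((spacing[n], width_km, height_km))
    return summary, problems


# The two domains from small_domain_twonest.nml.
summary, problems = check_domains(
    [Domain(1, 1, 1, 1, 51, 42), Domain(1, 3, 20, 20, 19, 16)], dx=30000)
```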

whose path (in this case, a relative path) is specified in the wfnamelist, wfnamelist.geogrid-twonest-4mpitasks

&control
   workflow_list = 'geogrid'
   workflow_rootdir = '/dvlscratch/ATM/morton/tmp'
/

&domain_defn
   domain_defn_path = 'small_domain_twonest.nml'
/

&geogrid
   num_mpi_tasks = 4
/

$ ./epython3 ehratmwf.py -n wfnamelist.geogrid-twonest-4mpitasks
2023-09-19:22:53:05 --> Workflow started
2023-09-19:22:53:05 --> Start process_namelist()
2023-09-19:22:53:05 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366
2023-09-19:22:53:05 --> Start run_geogrid()
-----------------------
Workflow Events Summary
-----------------------
2023-09-19:22:53:05 --> Workflow started
2023-09-19:22:53:05 --> Start process_namelist()
2023-09-19:22:53:05 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366
2023-09-19:22:53:05 --> Start run_geogrid()
2023-09-19:22:53:16 --> Workflow completed...

Again, note the location of the run directory, as we’ll need this information to run the standalone metgrid component.

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366/geogrid_rundir/WPS
geo_em.d01.nc geogrid.log.0001 metgrid.exe@ namelist.wps.nmm
geo_em.d02.nc geogrid.log.0002 namelist.wps README
geogrid/ geogrid.log.0003 namelist.wps.all_options ungrib/
geogrid.exe@ link_grib.csh* namelist.wps.fire* ungrib.exe@
geogrid.log.0000 metgrid/ namelist.wps.global util/

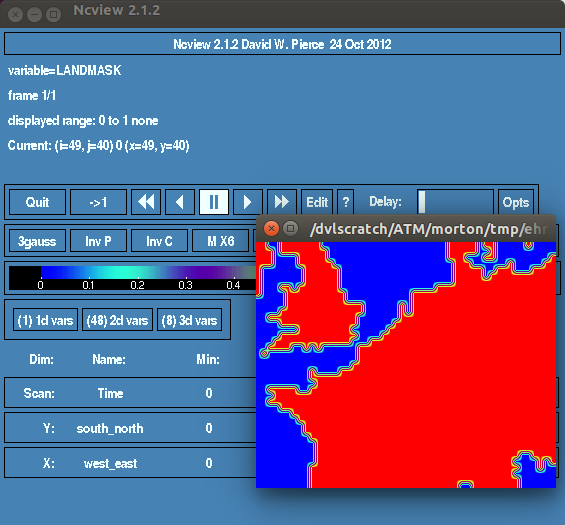

The geogrid output files, geo_em.d0?.nc, can be viewed with the ncview utility (if it’s not on the system path, a copy of the executable is available in the repository at misc/utilities/ncview):

$ ncview /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366/geogrid_rundir/WPS/geo_em.d01.nc

Simple two-nest metgrid

For this one, we’ll use wfnamelist.ecmwf-metgridonly-twonest-4mpitasks

Because this metgrid run is standalone, the wfnamelist must specify where to find the ungrib and geogrid files produced above; edit these paths to match your own run directories. Unlike the simple GFS case, we must specify the locations of three sets of ungribbed files (model level, surface level, and pressure level), using the output directories from the previous two components.

&control
   workflow_list = 'metgrid'
/

&time
   start_time = '2014012400'
   end_time = '2014012403'
   wrf_spinup_hours = 0
/

&metgrid
   ug_prefix_01 = 'ECMWF_ML'
   ug_path_01 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_ml/WPS'
   ug_prefix_02 = 'ECMWF_SFC'
   ug_path_02 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_sfc/WPS'
   ug_prefix_03 = 'PRES'
   ug_path_03 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ecmwfplevels_rundir/WPS'
   geogrid_path = '/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366/geogrid_rundir/WPS'
   num_nests = 2
   hours_intvl = 3
   num_mpi_tasks = 4
/
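The standalone &metgrid group depends only on the two earlier workflow run directories, so it can be generated instead of edited by hand. This is a minimal sketch under the assumption that the run-directory layout is the one shown above: ungrib_rundir_ecmwf_ml, ungrib_rundir_ecmwf_sfc and ecmwfplevels_rundir under the ungrib workflow directory, and geogrid_rundir under the geogrid workflow directory. The metgrid_group function is hypothetical and not part of EHRATM. Its default arguments simply mirror this example.

```python
from pathlib import Path


def metgrid_group(ungrib_wf_dir, geogrid_wf_dir, num_nests=2,
                  hours_intvl=3, num_mpi_tasks=4):
    """Return the text of a standalone &metgrid group for ERA inputs."""
    ug = Path(ungrib_wf_dir)
    # Prefix / directory pairs for model level, surface and pressure files.
    sources = [
        ("ECMWF_ML", ug / "ungrib_rundir_ecmwf_ml" / "WPS"),
        ("ECMWF_SFC", ug / "ungrib_rundir_ecmwf_sfc" / "WPS"),
        ("PRES", ug / "ecmwfplevels_rundir" / "WPS"),
    ]
    geo_path = Path(geogrid_wf_dir) / "geogrid_rundir" / "WPS"
    lines = ["&metgrid"]
    for i, (prefix, path) in enumerate(sources, start=1):
        lines.append(f"   ug_prefix_{i:02d} = '{prefix}'")
        lines.append(f"   ug_path_{i:02d} = '{path}'")
    lines.append(f"   geogrid_path = '{geo_path}'")
    lines.append(f"   num_nests = {num_nests}")
    lines.append(f"   hours_intvl = {hours_intvl}")
    lines.append(f"   num_mpi_tasks = {num_mpi_tasks}")
    lines.append("/")
    return "\n".join(lines) + "\n"
```

Called with /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201 and /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366, it produces the same settings as the &metgrid group shown above.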

Then we run it:

$ ./epython3 ehratmwf.py -n wfnamelist.ecmwf-metgridonly-twonest-4mpitasks
2023-09-19:23:04:55 --> Workflow started
2023-09-19:23:04:55 --> Start process_namelist()
2023-09-19:23:04:55 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_230455.938253
2023-09-19:23:04:55 --> Start run_metgrid()
-----------------------
Workflow Events Summary
-----------------------
2023-09-19:23:04:55 --> Workflow started
2023-09-19:23:04:55 --> Start process_namelist()
2023-09-19:23:04:55 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_230455.938253
2023-09-19:23:04:55 --> Start run_metgrid()
2023-09-19:23:04:58 --> Workflow completed...

The following excerpts of the run directory listing show links to all of the necessary geogrid and ungrib files (model level, surface level and pressure level), as well as the met_em* output files. This complex run directory is fully set up for another execution, if desired for experimentation or troubleshooting.

$ ls -l /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_230455.938253/metgrid_rundir/WPS
total 27204
lrwxrwxrwx. 1 morton consult 108 Sep 19 23:04 ECMWF_ML:2014-01-24_00 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_ml/WPS/ECMWF_ML:2014-01-24_00
lrwxrwxrwx. 1 morton consult 108 Sep 19 23:04 ECMWF_ML:2014-01-24_03 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_ml/WPS/ECMWF_ML:2014-01-24_03
lrwxrwxrwx. 1 morton consult 110 Sep 19 23:04 ECMWF_SFC:2014-01-24_00 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_sfc/WPS/ECMWF_SFC:2014-01-24_00
lrwxrwxrwx. 1 morton consult 110 Sep 19 23:04 ECMWF_SFC:2014-01-24_03 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ungrib_rundir_ecmwf_sfc/WPS/ECMWF_SFC:2014-01-24_03
lrwxrwxrwx. 1 morton consult 91 Sep 19 23:04 geo_em.d01.nc -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366/geogrid_rundir/WPS/geo_em.d01.nc
lrwxrwxrwx. 1 morton consult 91 Sep 19 23:04 geo_em.d02.nc -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_225305.871366/geogrid_rundir/WPS/geo_em.d02.nc
.
.
.
-rw-r--r--. 1 morton consult 11547876 Sep 19 23:04 met_em.d01.2014-01-24_00:00:00.nc
-rw-r--r--. 1 morton consult 11547876 Sep 19 23:04 met_em.d01.2014-01-24_03:00:00.nc
-rw-r--r--. 1 morton consult 1552892 Sep 19 23:04 met_em.d02.2014-01-24_00:00:00.nc
-rw-r--r--. 1 morton consult 1552892 Sep 19 23:04 met_em.d02.2014-01-24_03:00:00.nc
.
.
.
lrwxrwxrwx. 1 morton consult 101 Sep 19 23:04 PRES:2014-01-24_00 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ecmwfplevels_rundir/WPS/PRES:2014-01-24_00
lrwxrwxrwx. 1 morton consult 101 Sep 19 23:04 PRES:2014-01-24_03 -> /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_190308.462201/ecmwfplevels_rundir/WPS/PRES:2014-01-24_03
.
.
.
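metgrid writes one met_em.d0N.YYYY-MM-DD_HH:MM:SS.nc file per domain and time step. The listing above shows EHRATM producing files for every domain at every hours_intvl step. Assuming that pattern holds, the following hypothetical Python helpers, which are not part of EHRATM, list the expected names and report any that are missing from a metgrid run directory.

```python
from datetime import datetime, timedelta
from pathlib import Path


def met_em_names(num_nests, start, end, hours_intvl):
    """Expected met_em file names for domains d01..d0N from start to end."""
    t0 = datetime.strptime(start, "%Y%m%d%H")
    t_end = datetime.strptime(end, "%Y%m%d%H")
    names = []
    for dom in range(1, num_nests + 1):
        t = t0
        while t <= t_end:
            names.append(f"met_em.d{dom:02d}.{t:%Y-%m-%d_%H:%M:%S}.nc")
            t += timedelta(hours=hours_intvl)
    return names


def missing_met_em(wps_dir, num_nests, start, end, hours_intvl):
    """Expected met_em files that are not present as regular files in wps_dir."""
    wps = Path(wps_dir)
    return [name for name in met_em_names(num_nests, start, end, hours_intvl)
            if not (wps / name).is_file()]
```

For the run above, missing_met_em("/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_230455.938253/metgrid_rundir/WPS", 2, "2014012400", "2014012403", 3) would be expected to return an empty list.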

Full WPS ungrib + geogrid + metgrid Workflow

In practice, we will usually want a single wfnamelist that defines a full workflow. The above examples were provided mainly as a demonstration of how components can be chained together separately for asynchronous workflows, and specifically of the added complexity of the ERA inputs. In the following, we define the entire workflow in one wfnamelist. In this scenario, we don’t need to keep track of where the geogrid and ungrib outputs are; ehratmwf.py handles all of that.

wfnamelist.ecmwf-fullwps-twonest-4mpitasks

&control
   workflow_list = 'ungrib', 'geogrid', 'metgrid'
/

&time
   start_time = '2014012400'
   end_time = '2014012403'
   wrf_spinup_hours = 0
/

&grib_input1
   type = 'ecmwf_ml'
   hours_intvl = 3
   rootdir = '/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA'
/

&grib_input2
   type = 'ecmwf_sfc'
   hours_intvl = 3
   rootdir = '/dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA'
/

&domain_defn
   domain_defn_path = 'small_domain_twonest.nml'
/

&geogrid
   num_mpi_tasks = 4
/

&metgrid
   hours_intvl = 3
   num_mpi_tasks = 4
/
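The wfnamelists in these examples use simple groups with one `key = value` entry per line, so their settings can be read back for inspection, for example to confirm the workflow_list or time range, with a few lines of Python. read_simple_namelist is a hypothetical helper, not a full Fortran namelist parser. It does not handle commas inside quoted strings, values continued across lines, or repeat counts, and it returns every value as a string. For general namelist files, the f90nml package is a more robust choice.

```python
def read_simple_namelist(text):
    """Parse '&group ... /' blocks with one 'key = v1, v2' entry per line.

    Returns {group: {key: value}}, where value is a string, or a list of
    strings when the entry has several comma-separated values.
    """
    groups, current = {}, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("!"):
            continue  # skip blank lines and Fortran-style comments
        if line.startswith("&"):
            current = groups.setdefault(line[1:].strip(), {})
        elif line == "/":
            current = None  # end of the current group
        elif current is not None and "=" in line:
            key, value = line.split("=", 1)
            items = [v.strip().strip("'\"") for v in value.split(",") if v.strip()]
            current[key.strip()] = items[0] if len(items) == 1 else items
    return groups
```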

Then we run it, again noting the run directory, which in this case contains the run directories for ungrib, geogrid and metgrid:

$ ./epython3 ehratmwf.py -n wfnamelist.ecmwf-fullwps-twonest-4mpitasks
2023-09-19:23:21:36 --> Workflow started
2023-09-19:23:21:36 --> Start process_namelist()
2023-09-19:23:21:36 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_232136.681431
2023-09-19:23:21:36 --> Start run_ungrib()
2023-09-19:23:21:38 --> Start run_ecmwfplevels()
2023-09-19:23:21:39 --> Start run_geogrid()
2023-09-19:23:21:48 --> Start run_metgrid()
-----------------------
Workflow Events Summary
-----------------------
2023-09-19:23:21:36 --> Workflow started
2023-09-19:23:21:36 --> Start process_namelist()
2023-09-19:23:21:36 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_232136.681431
2023-09-19:23:21:36 --> Start run_ungrib()
2023-09-19:23:21:38 --> Start run_ecmwfplevels()
2023-09-19:23:21:39 --> Start run_geogrid()
2023-09-19:23:21:48 --> Start run_metgrid()
2023-09-19:23:21:50 --> Workflow completed...

$ tree -L 2 -F /dvlscratch/ATM/morton/tmp/ehratmwf_20230919_232136.681431
/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_232136.681431
├── ecmwfplevels_namelist.wps
├── ecmwfplevels_rundir/
│   ├── GEOG_DATA -> /dvlscratch/ATM/morton/WRFDistributions/WRFV4.3/GEOG_DATA/
│   ├── WPS/
│   └── WRF/
├── geogrid_namelist.wps
├── geogrid_rundir/
│   ├── GEOG_DATA -> /dvlscratch/ATM/morton/WRFDistributions/WRFV4.3/GEOG_DATA/
│   ├── WPS/
│   └── WRF/
├── metgrid_rundir/
│   ├── GEOG_DATA -> /dvlscratch/ATM/morton/WRFDistributions/WRFV4.3/GEOG_DATA/
│   ├── WPS/
│   └── WRF/
├── namelist.wps
├── namelist.wps_ecmwf_ml
├── namelist.wps_ecmwf_sfc
├── tmpmetdata-ecmwf_ml/
│   ├── EA2014012400.ml -> /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA/2014/01/24/EA2014012400.ml
│   └── EA2014012403.ml -> /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA/2014/01/24/EA2014012403.ml
├── tmpmetdata-ecmwf_sfc/
│   ├── EA2014012400.sfc -> /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA/2014/01/24/EA2014012400.sfc
│   └── EA2014012403.sfc -> /dvlscratch/ATM/morton/EHRATM_ERA_TESTDATA/2014/01/24/EA2014012403.sfc
├── ungrib_rundir_ecmwf_ml/
│   ├── GEOG_DATA -> /dvlscratch/ATM/morton/WRFDistributions/WRFV4.3/GEOG_DATA/
│   ├── WPS/
│   └── WRF/
└── ungrib_rundir_ecmwf_sfc/
    ├── GEOG_DATA -> /dvlscratch/ATM/morton/WRFDistributions/WRFV4.3/GEOG_DATA/
    ├── WPS/
    └── WRF/

22 directories, 9 files

Each of the run directories is self-contained and all set up for execution of the specific case components, and the interested user can go into them and rerun for experimentation and/or troubleshooting.
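In this listing the run directories share a <component>_rundir[_<input type>] naming pattern. Locating them programmatically, for example to rerun a single component, therefore takes only a little code. The sketch below is hypothetical: it infers the pattern from the listing above and is not an EHRATM API.

```python
from pathlib import Path


def parse_rundir_name(name):
    """Split e.g. 'ungrib_rundir_ecmwf_ml' into ('ungrib', 'ecmwf_ml').

    Returns None for entries that do not follow the *_rundir pattern.
    """
    component, sep, suffix = name.partition("_rundir")
    if not sep or not component or (suffix and not suffix.startswith("_")):
        return None
    return component, suffix[1:]  # drop the leading '_' of the input type


def find_run_dirs(workflow_dir):
    """Map (component, input_type) to each run directory under workflow_dir."""
    found = {}
    for entry in sorted(Path(workflow_dir).iterdir()):
        parsed = parse_rundir_name(entry.name)
        if parsed is not None and entry.is_dir():
            found[parsed] = entry
    return found
```

For the workflow above, find_run_dirs("/dvlscratch/ATM/morton/tmp/ehratmwf_20230919_232136.681431")[("metgrid", "")] / "WPS" would point at the metgrid WPS directory.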