Overview

Introduction

The nwpservice package presents a Python-based API to components of WPS/WRF and Flexpart WRF, allowing for the creation of flexible workflows for weather and ATM simulations. Through use of the APIs presented by this package, Python programs can be built to execute individual, standalone model components for testing, development, or as part of an overall staging activity. Or, a Python program can be built to construct a workflow of the components in a loosely-coupled fashion. Both approaches will be demonstrated within this documentation.

The original motivation for the development of the nwpservice package was to provide a complexity-hiding abstraction for the EHRATM project, making it easier for developers to build a variety of workflows for generating WRF output, which would be used to drive Flexpart WRF ATM simulations.

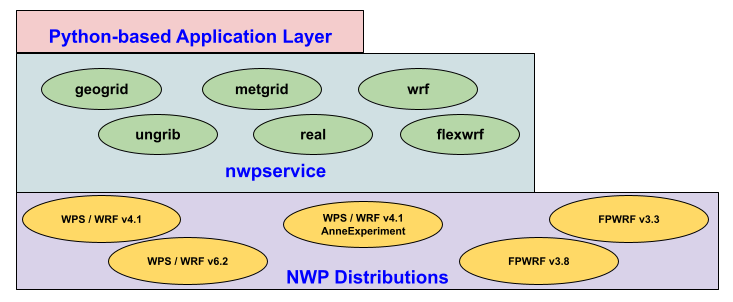

We can envision a layered architecture with nwpservice sandwiched between an upper application layer and a lower layer consisting of a collection of WPS/WRF and Flexpart WRF model distributions. The Python-based Application Layer will typically consist of Python programs that use the individual nwpservice components in standalone fashion, but these programs can also chain the components together to form flexible workflows. Alternatively, the “application” may be a higher-level Python package (this is the case with EHRATM) that further abstracts access to the underlying models, hiding their details.
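As a rough illustration of the loosely-coupled chaining described above, the sketch below hands each stage's output manifest to the next stage. The names `fake_ungrib`, `fake_metgrid` and `run_chain` are hypothetical stand-ins invented for this example and are not part of nwpservice; in a real application each stage would wrap one of the package's components.

```python
from typing import Callable, Dict, List

# A "stage" is any callable that takes the previous manifest and returns
# its own. All names here are hypothetical, not nwpservice APIs.
Manifest = Dict[str, object]
Stage = Callable[[Manifest], Manifest]


def fake_ungrib(previous: Manifest) -> Manifest:
    """Stand-in for an ungrib step; pretends to produce two intermediate files."""
    return {"stage": "ungrib", "files": ["FILE:2017-10-23_03", "FILE:2017-10-23_06"]}


def fake_metgrid(previous: Manifest) -> Manifest:
    """Stand-in for a metgrid step; derives one output name per input file."""
    inputs = previous["files"]
    return {"stage": "metgrid", "files": ["met_em." + name for name in inputs]}


def run_chain(stages: List[Stage]) -> Manifest:
    """Run stages in order, handing each one the manifest of its predecessor."""
    manifest: Manifest = {}
    for stage in stages:
        manifest = stage(manifest)
    return manifest


final = run_chain([fake_ungrib, fake_metgrid])
print(final["files"])
```

Because the only coupling between stages is the manifest, any stage can be replaced, tested alone, or fed from previously staged output without touching the others.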

The underlying NWP Distributions layer is a collection of locally-installed WPS/WRF and Flexpart WRF models. They are assumed to be installed in a standardised way per the nwp_install component (description available in main EHRATM documentation). The nwp_install component will acquire and install specified model software in a programmatic way, so that the installation is performed exactly the same way every time, and this is followed by testing to ensure that the software is installed correctly. So, the nwpservice package depends on a correct underlying NWP Distributions layer, but this provides a degree of stability to ensure that developers at the application layer will experience correct model behaviour through use of the nwpservice package.

Although designed with EHRATM in mind, a primary goal has been to make this package application-independent, so that developers can build WPS/WRF and Flexpart WRF applications without dealing with the complexities inherent in installing and using the original model software. EHRATM is just one of many possible applications (and, so far, the only one implemented) that depend on a correct underlying nwpservice package.

The nwpservice package is intended to provide ample abstraction of the model run, so that the user of these components generally needs to specify only locations of various directories, as well as a correct namelist for the model. At this low level, the components make no decisions on their own - they simply work with what they are given and raise exceptions if something goes wrong. This is intended to separate the policy (how the component is used) from the implementation (how the component does what it’s told to do). In this way, the components can be used in a wide variety of scenarios, dictated by the arguments provided to the APIs. A change in policy - how the component is used - should not require any change of code within the nwpservice component itself.
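The separation of policy from implementation can be sketched with a toy component. This is only an illustration under stated assumptions: `ComponentError`, `EchoComponent` and `caller_policy` are hypothetical names, and the real nwpservice classes and exception types may look quite different. The component does what it is told and raises on a problem; the caller decides what a failure means.

```python
from pathlib import Path
import tempfile


class ComponentError(Exception):
    """Hypothetical exception type; nwpservice's real exceptions may differ."""


class EchoComponent:
    """Toy component: it only checks and uses what it is given (implementation)."""

    def __init__(self, rundir: Path, namelist: Path):
        self.rundir = Path(rundir)
        self.namelist = Path(namelist)

    def setup(self) -> None:
        # No fallback or guessing: a missing input is reported, not repaired.
        if not self.namelist.is_file():
            raise ComponentError(f"namelist not found: {self.namelist}")
        self.rundir.mkdir(parents=True, exist_ok=True)

    def run(self) -> dict:
        # The "model run" here is just a copy of the namelist into rundir.
        copied = self.rundir / self.namelist.name
        copied.write_text(self.namelist.read_text())
        return {"rundir": str(self.rundir), "files": [copied.name]}


def caller_policy(rundir: Path, namelist: Path) -> dict:
    """Policy lives in the caller: here, a failure becomes an empty manifest."""
    component = EchoComponent(rundir, namelist)
    try:
        component.setup()
        return component.run()
    except ComponentError:
        return {}


with tempfile.TemporaryDirectory() as tmp:
    # The namelist does not exist, so setup() raises and the policy applies.
    missing = caller_policy(Path(tmp) / "run", Path(tmp) / "namelist.wps")
    print(missing)
```

A different application could wrap the same component with a different policy (retry, abort, log and continue) without any change to the component code.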

[Added 05 April 2024] - The wrf module of nwpservice assumes that the path to a valid and correct namelist.input file will be provided. Very little error-checking of this namelist.input is performed at this low level, the assumption being that those who create the file will perform any desired error-checking. Recently, a new class, NamelistInputWriter, has been added to the wrf module. Given a namelist template and a dictionary of desired changes relative to the template, this class creates a custom namelist.input usable by the wrf module. It is provided in nwpservice as a utility for higher layers (e.g. ehratm) as they set up the environment needed for the wrf module. More detail is available in ../TechNotes/NamelistInputWriterDemo/index.
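The "template plus dictionary of changes" idea can be sketched with plain Python dictionaries. This is not the NamelistInputWriter API (see the Technical Note referenced above for that); `apply_overrides`, `format_value` and `render` are hypothetical helpers, and the template holds only a handful of namelist.input variables for illustration.

```python
import copy
from typing import Dict

Namelist = Dict[str, Dict[str, object]]


def apply_overrides(template: Namelist, changes: Namelist) -> Namelist:
    """Return a copy of the template with the requested values replaced or added."""
    result = copy.deepcopy(template)
    for group, values in changes.items():
        result.setdefault(group, {}).update(values)
    return result


def format_value(value: object) -> str:
    """Render one Python value in Fortran namelist syntax."""
    if isinstance(value, bool):  # checked before numbers: bool is an int subclass
        return ".true." if value else ".false."
    if isinstance(value, str):
        return "'" + value + "'"
    if isinstance(value, (list, tuple)):
        return ", ".join(format_value(item) for item in value)
    return str(value)


def render(namelist: Namelist) -> str:
    """Render groups as '&group ... /' blocks, one variable per line."""
    lines = []
    for group, values in namelist.items():
        lines.append("&" + group)
        for key, value in values.items():
            lines.append(f" {key} = {format_value(value)},")
        lines.append("/")
    return "\n".join(lines) + "\n"


template = {"time_control": {"run_hours": 6, "history_interval": [60, 60]},
            "domains": {"max_dom": 2, "time_step": 180}}
changes = {"time_control": {"run_hours": 3}, "domains": {"time_step": 90}}

custom = apply_overrides(template, changes)
print(render(custom))
```

Keeping the template untouched and expressing each run as a small dictionary of differences makes it easy for a higher layer to generate many namelist variants from one vetted baseline.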

Requirements

The nwpservice components depend on:

WPS/WRF and Flexpart WRF distributions that have been installed in a standard way. Although this standard generally adheres to a typical installation as described by the model developers, the components rely on the presence of a custom script that creates a virtual model environment (a directory in user space with links to the original files); the components then run within that virtual model environment. It’s not a radical departure, but developers need to be aware of this (discussed a bit more in Technical Notes).

A compatible Python 3 environment. To the best of my knowledge, this will all work with the Python 3.6.8 installed in the CTBTO RHEL 7 (or CentOS 7 VM) environment, except for the need to use an additional f90nml package. However, to ensure standardization, a Conda environment has been created, and all development and testing has been done with it (it is used for all of the EHRATM components). The nwpservice package has not been tested outside of this Conda environment.
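The notion of a virtual model environment mentioned in the first requirement (a user-space directory of links to the installed files) can be illustrated with a few lines of standard-library Python. This is only a sketch of the idea: the actual distributions use their own custom script, and `link_distribution` and the placeholder file names below are assumptions made for the example.

```python
import tempfile
from pathlib import Path


def link_distribution(distro_dir: Path, virtual_dir: Path) -> list:
    """Create virtual_dir and fill it with symlinks to the files in distro_dir."""
    virtual_dir.mkdir(parents=True, exist_ok=True)
    linked = []
    for source in sorted(distro_dir.iterdir()):
        if source.is_file():
            target = virtual_dir / source.name
            # The link points at the installed file; nothing is copied.
            target.symlink_to(source.resolve())
            linked.append(target.name)
    return linked


with tempfile.TemporaryDirectory() as tmp:
    # Build a tiny fake "distribution" with placeholder files.
    distro = Path(tmp) / "distro"
    distro.mkdir()
    (distro / "metgrid.exe").write_text("placeholder")
    (distro / "METGRID.TBL").write_text("placeholder")

    names = link_distribution(distro, Path(tmp) / "run" / "WPS")
    print(names)
```

Running inside such a directory leaves the installed distribution untouched, so several independent runs can share one installation.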

Demo Preview

Although presented in more depth in Technical Notes, the following is a brief illustration of how a Python application would make use of the nwpservice package to run a single component in WPS. Creating more complex, and full, simulations would simply be a matter of building code that calls other similar components and chains them together in the desired workflow.

In this excerpt, we demonstrate how a metgrid object is created (assuming that correct arguments have been prepared beforehand), then set up and run.

    import logging

    import nwpservice.wps.metgrid as metgrid

    # ... (elided: the surrounding code prepares the arguments used below)

    # Create the metgrid component from the prepared paths and settings
    metgrid_obj = metgrid.Metgrid(
        wpswrf_distro_path=self._wpswrf_distro,
        wpswrf_rundir=self._testrun_dir,
        namelist_wps=namelist_wps,
        ungribbed_data=ungribbed_testdata,
        geogriddatadir=self._testcase_geogrid_data_dir,
        output_dir=self._output_stage_dir,
        numpes=int(self._num_mpi_tasks_wps),
        mpirun_path=self._mpirun,
        log_level=logging.DEBUG
    )

    # Set up the component, then run it; run() returns the output manifest
    metgrid_obj.setup()
    output_manifest = metgrid_obj.run()

    print('output_manifest: %s' % output_manifest)

When the run is complete, output_manifest is available and can be used to access the output in a following stage of the workflow:

    output_manifest: {
        'rundir': '/home/ctbtuser/tmp/metgrid_test_20230813.192637/WPS',
        'metgrid': {
            'files': {
                'met_em.d01.2017-10-23_03:00:00.nc': {'bytes': 2904236},
                'met_em.d01.2017-10-23_06:00:00.nc': {'bytes': 2904236},
                'met_em.d02.2017-10-23_03:00:00.nc': {'bytes': 1632560},
                'met_em.d02.2017-10-23_06:00:00.nc': {'bytes': 1632560}
            }
        }
    }
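A following stage might consume the manifest along these lines. The dictionary is copied from the output shown above; the helper functions `files_by_domain` and `total_bytes` are hypothetical and only illustrate how the structure can be walked, not how nwpservice itself uses it.

```python
import re
from collections import defaultdict

# Manifest copied from the example output above.
output_manifest = {
    "rundir": "/home/ctbtuser/tmp/metgrid_test_20230813.192637/WPS",
    "metgrid": {"files": {
        "met_em.d01.2017-10-23_03:00:00.nc": {"bytes": 2904236},
        "met_em.d01.2017-10-23_06:00:00.nc": {"bytes": 2904236},
        "met_em.d02.2017-10-23_03:00:00.nc": {"bytes": 1632560},
        "met_em.d02.2017-10-23_06:00:00.nc": {"bytes": 1632560},
    }},
}


def files_by_domain(manifest: dict) -> dict:
    """Group met_em file names by their domain tag (d01, d02, ...)."""
    grouped = defaultdict(list)
    for name in sorted(manifest["metgrid"]["files"]):
        match = re.match(r"met_em\.(d\d+)\.", name)
        if match:
            grouped[match.group(1)].append(name)
    return dict(grouped)


def total_bytes(manifest: dict) -> int:
    """Sum the recorded sizes, e.g. to check free space before staging."""
    return sum(info["bytes"] for info in manifest["metgrid"]["files"].values())


domains = files_by_domain(output_manifest)
print(sorted(domains))
print(total_bytes(output_manifest))
```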

Beyond the ability to create flexible workflows, this focus on standalone, loosely-coupled components means that workflow developers can create staging processes, in which certain tasks (for example, ungribbing the met files) are performed once on a set of input files, with the output staged, or even archived, for use by additional workflows at a later time. Finally, perhaps the greatest advantage of this design is that developers can focus on a single component of interest for troubleshooting or for experimenting with potential new features, all without having to worry about an overall large simulation package.
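The staging idea can be sketched as "run once, then reuse". In this minimal example, which assumes manifests are JSON-serialisable, an expensive step is executed only if no saved manifest exists in the staging directory. The names `run_or_reuse` and `fake_ungrib`, and the `manifest.json` file name, are hypothetical and are not part of nwpservice.

```python
import json
import tempfile
from pathlib import Path
from typing import Callable


def run_or_reuse(stage_dir: Path, step: Callable[[], dict]) -> dict:
    """Run step() once; later calls reload the manifest saved in stage_dir."""
    manifest_file = stage_dir / "manifest.json"
    if manifest_file.is_file():
        return json.loads(manifest_file.read_text())
    manifest = step()
    stage_dir.mkdir(parents=True, exist_ok=True)
    manifest_file.write_text(json.dumps(manifest, indent=2))
    return manifest


calls = []  # records how many times the expensive step really ran


def fake_ungrib() -> dict:
    """Stand-in for an expensive step such as ungribbing the met files."""
    calls.append("ungrib")
    return {"ungrib": {"files": {"FILE:2017-10-23_03": {"bytes": 0}}}}


with tempfile.TemporaryDirectory() as tmp:
    stage = Path(tmp) / "ungrib_stage"
    first = run_or_reuse(stage, fake_ungrib)   # runs the step
    second = run_or_reuse(stage, fake_ungrib)  # reuses the staged manifest
    print(len(calls), first == second)
```

A later workflow only needs the staging directory and its manifest; it does not need to know how, or when, the staged output was produced.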