Introduction to Use of ehratmwf.py

This section is written for the new user who wants to get a feeling for how the EHRATM workflow is used, without getting buried in the details of setting up the environment that makes this happen. We therefore assume that somebody has already set up things like environment variables, the Python Conda environment, symbolic links to important utilities, access to the code repository, and adjustments to the defaults files within the code repository. The new user is encouraged to find someone to do this for them, but if they really want to do it themselves, a discussion of the setup is available in the companion document, Environment Setup. In the future, the procedures outlined in that document might be useful for building a script to automate the environment setup.

For now, we will assume that the new user has an environment ready to go, and we will proceed in an incremental fashion, starting with simple workflows and evolving towards full and more interesting workflows. We will begin with workflows based on the use of GFS global meteorology files available in the CTBTO devlan file system, and that will be followed by examples using ECMWF ERA custom meteorology files, which adds a level of complexity to the workflow.

When the new user has worked through the examples in this document, we expect that they will have a general overview of what the system is capable of, and will be well-positioned to start trying workflows of their own and to begin learning about some of the other details in a workflow.

Preliminaries

We start with the help message of ehratmwf.py:

$ ehratmwf.py --help

usage: ehratmwf.py [-h] -n WF_NAMELIST_PATH

Enhanced High Res ATM

optional arguments:

-h, --help show this help message and exit

-n WF_NAMELIST_PATH, --namelist WF_NAMELIST_PATH

path to workflow namelist

In the following examples you will see a workflow_rootdir entry in the workflow namelists. It is important that you set this to an existing directory for which you have write permissions. This is an optional entry, however, and if you don’t use it the default is typically /tmp, which may or may not be acceptable on the system that you are using. If you’re not sure, you should inquire about this.
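
Before launching a workflow it can be worth confirming that the directory you intend to use really exists and is writable. The following is only a minimal sketch of such a check; the function name check_rootdir is our own invention and is not part of ehratmwf.py.

```python
import os
import tempfile


def check_rootdir(path):
    """Return a list of problems with a candidate workflow_rootdir (empty if OK)."""
    problems = []
    if not os.path.isdir(path):
        problems.append(f"{path} is not an existing directory")
    elif not os.access(path, os.W_OK | os.X_OK):
        problems.append(f"{path} is not writable by the current user")
    return problems


# Example: a freshly created temporary directory passes the check,
# while a path that does not exist is reported.
with tempfile.TemporaryDirectory() as tmp:
    ok_result = check_rootdir(tmp)
    missing_result = check_rootdir(os.path.join(tmp, "does_not_exist"))

print(ok_result)
print(missing_result)
```

In practice you would call check_rootdir() with the same path that you put in the workflow_rootdir entry.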

Each of the workflows described below will create a subdirectory underneath the workflow_rootdir, of the form

ehratmwf_20231121_034006.925185

where the numbers are a timestamp indicating when the workflow started to run.
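
If you collect many of these run directories, it can be handy to recover the start time from the name. The sketch below assumes the name is the prefix ehratmwf_ followed by a YYYYMMDD_HHMMSS.microseconds stamp, which is inferred from the example above rather than taken from the ehratmwf.py source; parse_run_dirname is a hypothetical helper.

```python
from datetime import datetime

PREFIX = "ehratmwf_"
# Assumed layout of the stamp: date, underscore, time, dot, microseconds
STAMP_FORMAT = "%Y%m%d_%H%M%S.%f"


def parse_run_dirname(name):
    """Return the workflow start time encoded in a run directory name."""
    if not name.startswith(PREFIX):
        raise ValueError(f"unexpected directory name: {name}")
    return datetime.strptime(name[len(PREFIX):], STAMP_FORMAT)


started = parse_run_dirname("ehratmwf_20231121_034006.925185")
print(started.isoformat())
```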

GFS-based Workflow Examples

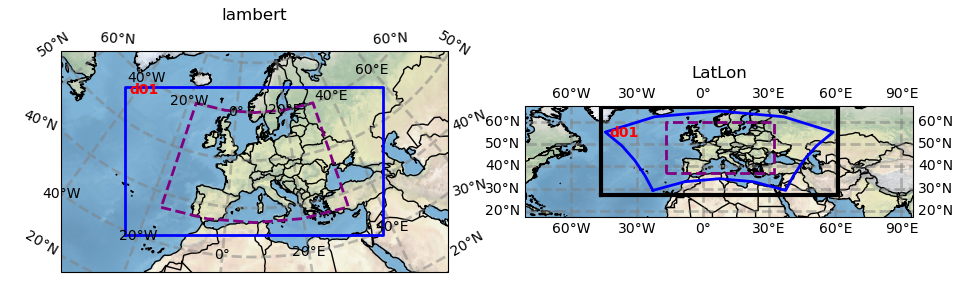

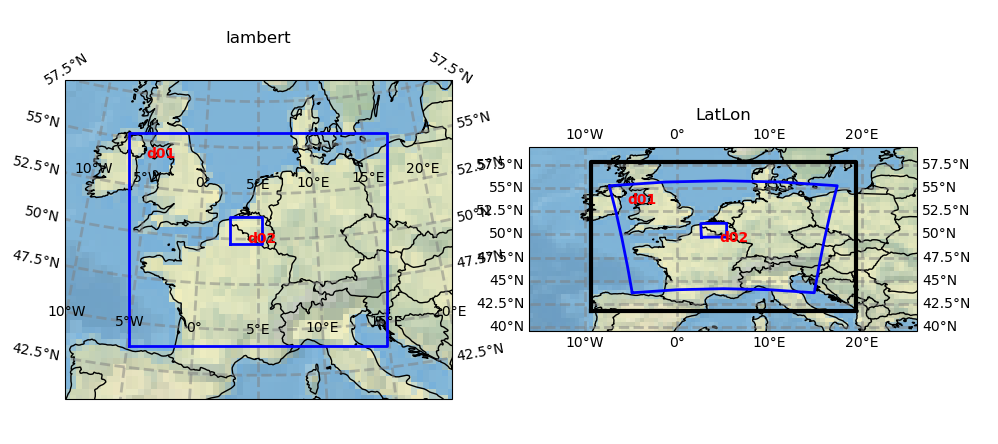

For this example we use a 1-nest domain, d01, for driving the WRF simulation, with an embedded Flexpart OUTGRID whose lower-left (LL) corner is at (37N, 17W) and whose upper-right (UR) corner is at (60N, 32E).

We start by defining a simple workflow whose sole purpose is to ungrib a set of GFS meteorology files, available on CTBTO devlan in /ops/data/atm/live/ncep. The workflow namelist (wfnamelist) follows

wfnamelist.gfsungrib

&control

workflow_list = 'ungrib'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2023112000'

end_time = '2023112006'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

Again, the workflow_rootdir entry should be modified to an already-existing user-writable directory.

We can run this easily as follows

$ ehratmwf.py -n wfnamelist.gfsungrib

2023-11-21:00:37:11 --> Workflow started

2023-11-21:00:37:11 --> Start process_namelist()

2023-11-21:00:37:11 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_003711.383996

2023-11-21:00:37:11 --> Start run_ungrib()

-----------------------

Workflow Events Summary

-----------------------

2023-11-21:00:37:11 --> Workflow started

2023-11-21:00:37:11 --> Start process_namelist()

2023-11-21:00:37:11 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_003711.383996

2023-11-21:00:37:11 --> Start run_ungrib()

2023-11-21:00:37:23 --> Workflow completed...

In the workflow directory, we look in the WPS subdirectory of ungrib_rundir_gfs_ctbto/ and see the three ungribbed files, GFS:*

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_003711.383996/ungrib_rundir_gfs_ctbto/WPS

geogrid GRIBFILE.AAB namelist.wps.all_options ungrib.exe

geogrid.exe GRIBFILE.AAC namelist.wps.fire ungrib.log

GFS:2023-11-20_00 link_grib.csh namelist.wps.global util

GFS:2023-11-20_03 metgrid namelist.wps.nmm Vtable

GFS:2023-11-20_06 metgrid.exe README

GRIBFILE.AAA namelist.wps ungrib
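
The three GFS:* names in this listing follow directly from the start_time, end_time and hours_intvl entries of the wfnamelist. As a rough illustration (the helper expected_ungrib_names is hypothetical and not part of the workflow code), the expected names can be generated as follows, which is useful when checking that an ungrib run produced everything it should have.

```python
from datetime import datetime, timedelta


def expected_ungrib_names(start_time, end_time, hours_intvl, prefix="GFS"):
    """List intermediate file names PREFIX:YYYY-MM-DD_HH from start to end inclusive."""
    fmt = "%Y%m%d%H"  # same YYYYMMDDHH layout as start_time/end_time in the wfnamelist
    current = datetime.strptime(start_time, fmt)
    end = datetime.strptime(end_time, fmt)
    names = []
    while current <= end:
        names.append(f"{prefix}:{current:%Y-%m-%d_%H}")
        current += timedelta(hours=hours_intvl)
    return names


names = expected_ungrib_names("2023112000", "2023112006", 3)
print(names)
```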

Congratulations, you’ve just run a single component of the WPS/WRF/FlexpartWRF workflow.

Let’s try one more single component, and after that we’ll try to combine them.

wfnamelist.geogrid

&control

workflow_list = 'geogrid'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&domain_defn

domain_defn_path = 'eu_domain.nml'

/

&geogrid

num_mpi_tasks = 8

/

This requires another namelist to define the modeling domain in detail

eu_domain.nml

&domain_defn

parent_id = 1,

parent_grid_ratio = 1,

i_parent_start = 1,

j_parent_start = 1,

e_we = 224,

e_sn = 128,

geog_data_res = '10m',

dx = 27000,

dy = 27000,

map_proj = 'lambert',

ref_lat = 49.826,

ref_lon = 7.359,

truelat1 = 49.826,

truelat2 = 49.826,

stand_lon = 7.359,

/
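
To get a feeling for the size of this domain, recall that in WPS the e_we and e_sn entries count staggered grid points, so the domain spans (e_we - 1) cells in the west-east direction and (e_sn - 1) cells south-north. The short sketch below (domain_extent_km is our own illustrative helper) turns the values above into an approximate extent in kilometres, measured on the projected grid.

```python
def domain_extent_km(e_we, e_sn, dx, dy):
    """Return (west-east, south-north) extent in km of a WPS domain.

    e_we and e_sn count staggered grid points, so the domain spans
    (e_we - 1) cells of width dx and (e_sn - 1) cells of height dy (metres).
    """
    return (e_we - 1) * dx / 1000.0, (e_sn - 1) * dy / 1000.0


# Values taken from eu_domain.nml
width_km, height_km = domain_extent_km(e_we=224, e_sn=128, dx=27000, dy=27000)
print(width_km, height_km)
```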

We run this workflow

$ ehratmwf.py -n wfnamelist.geogrid

2023-11-21:01:17:05 --> Workflow started

2023-11-21:01:17:05 --> Start process_namelist()

2023-11-21:01:17:05 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_011705.562222

2023-11-21:01:17:05 --> Start run_geogrid()

-----------------------

Workflow Events Summary

-----------------------

2023-11-21:01:17:05 --> Workflow started

2023-11-21:01:17:05 --> Start process_namelist()

2023-11-21:01:17:05 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_011705.562222

2023-11-21:01:17:05 --> Start run_geogrid()

2023-11-21:01:17:27 --> Workflow completed...

and looking within the run directory

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_011705.562222/geogrid_rundir/WPS

geo_em.d01.nc geogrid.log.0003 metgrid namelist.wps.nmm

geogrid geogrid.log.0004 metgrid.exe README

geogrid.exe geogrid.log.0005 namelist.wps ungrib

geogrid.log.0000 geogrid.log.0006 namelist.wps.all_options ungrib.exe

geogrid.log.0001 geogrid.log.0007 namelist.wps.fire util

geogrid.log.0002 link_grib.csh namelist.wps.global

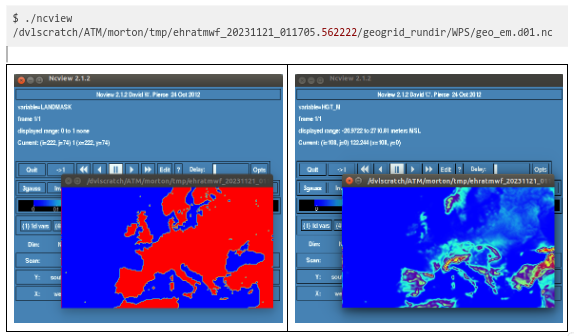

we find a fully functional run directory for geogrid. If desired, the namelist.wps could be edited, and geogrid.exe could be run to produce new output, geo_em.d01.nc.

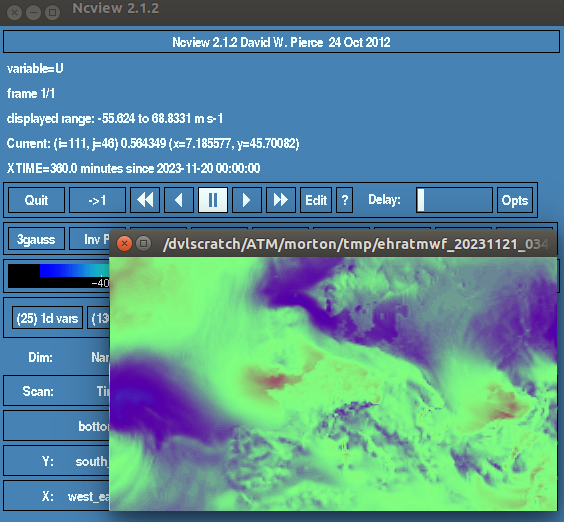

The geo_em.d01.nc file is viewable with ncview.

$ ncview /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_011705.562222/geogrid_rundir/WPS/geo_em.d01.nc

Next, let’s combine three components - ungrib, geogrid and metgrid - to perform all the WRF preprocessing

wfnamelist.wps

&control

workflow_list = 'ungrib', 'geogrid', 'metgrid'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2023112000'

end_time = '2023112006'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

&domain_defn

domain_defn_path = 'eu_domain.nml'

/

&geogrid

num_mpi_tasks = 8

/

&metgrid

hours_intvl=3,

num_mpi_tasks = 16

/

$ ehratmwf.py -n wfnamelist.wps

2023-11-21:01:56:06 --> Workflow started

2023-11-21:01:56:06 --> Start process_namelist()

2023-11-21:01:56:06 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078

2023-11-21:01:56:06 --> Start run_ungrib()

2023-11-21:01:56:15 --> Start run_geogrid()

2023-11-21:01:56:34 --> Start run_metgrid()

-----------------------

Workflow Events Summary

-----------------------

2023-11-21:01:56:06 --> Workflow started

2023-11-21:01:56:06 --> Start process_namelist()

2023-11-21:01:56:06 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078

2023-11-21:01:56:06 --> Start run_ungrib()

2023-11-21:01:56:15 --> Start run_geogrid()

2023-11-21:01:56:34 --> Start run_metgrid()

2023-11-21:01:56:41 --> Workflow completed...
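
The Workflow Events Summary is also a convenient source of rough timing information. The sketch below, which is not part of ehratmwf.py, parses the summary lines shown above and reports how many seconds elapsed between each event and the next. It assumes every event line has the form "timestamp --> message", as in the output above.

```python
from datetime import datetime

LOG_FORMAT = "%Y-%m-%d:%H:%M:%S"

summary = """\
2023-11-21:01:56:06 --> Workflow started
2023-11-21:01:56:06 --> Start process_namelist()
2023-11-21:01:56:06 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078
2023-11-21:01:56:06 --> Start run_ungrib()
2023-11-21:01:56:15 --> Start run_geogrid()
2023-11-21:01:56:34 --> Start run_metgrid()
2023-11-21:01:56:41 --> Workflow completed...
"""


def stage_durations(text):
    """Return [(event, seconds until the next event), ...] for each logged event."""
    events = []
    for line in text.splitlines():
        stamp, sep, message = line.partition(" --> ")
        if not sep:
            continue  # skip lines that are not timestamped events
        events.append((datetime.strptime(stamp, LOG_FORMAT), message))
    return [
        (message, int((later - when).total_seconds()))
        for (when, message), (later, _) in zip(events, events[1:])
    ]


durations = stage_durations(summary)
for message, seconds in durations:
    print(f"{seconds:4d} s  {message}")
```

For this run the three WPS components took roughly 9, 19 and 7 seconds respectively.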

Now when we look at the output directory we see a run directory for each component

$ tree -L 1 /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078

/dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078

├── geogrid_namelist.wps

├── geogrid_rundir

├── metgrid_rundir

├── namelist.wps

├── namelist.wps_gfs_ctbto

├── tmpmetdata-gfs_ctbto

└── ungrib_rundir_gfs_ctbto

4 directories, 3 files

As before, one could play around in this directory and rerun metgrid under different conditions (for debugging, for example) if desired.

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078/metgrid_rundir/WPS

geo_em.d01.nc metgrid.log.0001 metgrid.log.0014

geogrid metgrid.log.0002 metgrid.log.0015

geogrid.exe metgrid.log.0003 namelist.wps

GFS:2023-11-20_00 metgrid.log.0004 namelist.wps.all_options

GFS:2023-11-20_03 metgrid.log.0005 namelist.wps.fire

GFS:2023-11-20_06 metgrid.log.0006 namelist.wps.global

link_grib.csh metgrid.log.0007 namelist.wps.nmm

met_em.d01.2023-11-20_00:00:00.nc metgrid.log.0008 README

met_em.d01.2023-11-20_03:00:00.nc metgrid.log.0009 ungrib

met_em.d01.2023-11-20_06:00:00.nc metgrid.log.0010 ungrib.exe

metgrid metgrid.log.0011 util

metgrid.exe metgrid.log.0012

metgrid.log.0000 metgrid.log.0013

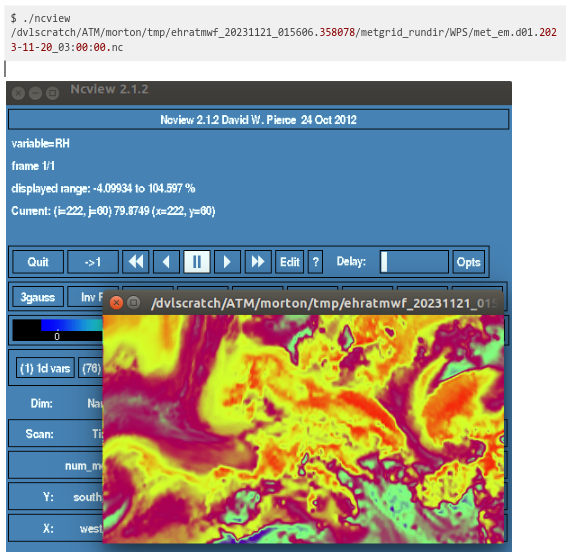

$ ncview /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_015606.358078/metgrid_rundir/WPS/met_em.d01.2023-11-20_03:00:00.nc

The ungrib and geogrid examples above were given just to build familiarity with running individual components, but it will likely be more common to run a workflow of several components, such as the full WPS workflow of ungrib, geogrid and metgrid shown above.

We can extend this even further and add in the WRF components, real and wrf, to the workflow namelist. In this case, we currently need to have a correct namelist.input for the workflow (the construction of that has not yet been automated).

namelist.input.eu

Note in the following that you will need to change the path of the namelist.input in the real and wrf sections

wfnamelist.wps+wrf

&control

workflow_list = 'ungrib', 'geogrid', 'metgrid', 'real', 'wrf'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2023112000'

end_time = '2023112006'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

&domain_defn

domain_defn_path = 'eu_domain.nml'

/

&geogrid

num_mpi_tasks = 8

/

&metgrid

hours_intvl=3,

num_mpi_tasks = 16

/

&real

num_nests = 1

hours_intvl = 3

! This needs to be a full, absolute path

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/namelist.input.eu'

num_mpi_tasks = 16

/

&wrf

num_nests = 1

hours_intvl = 3

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/namelist.input.eu'

num_mpi_tasks = 16

/
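
Because the anne_bypass_namelist_input entries must be full, absolute paths, a quick pre-flight check of the wfnamelist can save a failed run. The following is only a sketch: it uses a simple regular expression rather than a real namelist parser, it handles only straight single quotes, and relative_bypass_paths is a hypothetical helper. The &wrf entry in the sample text has deliberately been changed to a relative path to show what gets flagged.

```python
import os
import re

wfnamelist_text = """\
&real
num_nests = 1
hours_intvl = 3
anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/namelist.input.eu'
num_mpi_tasks = 16
/
&wrf
num_nests = 1
hours_intvl = 3
anne_bypass_namelist_input = 'namelist.input.eu'
num_mpi_tasks = 16
/
"""

# Matches lines of the form: anne_bypass_namelist_input = '<value>'
PATTERN = re.compile(r"^\s*anne_bypass_namelist_input\s*=\s*'([^']*)'", re.MULTILINE)


def relative_bypass_paths(text):
    """Return the anne_bypass_namelist_input values that are not absolute paths."""
    return [m.group(1) for m in PATTERN.finditer(text) if not os.path.isabs(m.group(1))]


bad = relative_bypass_paths(wfnamelist_text)
print(bad)
```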

Then, running the workflow

$ ehratmwf.py -n wfnamelist.wps+wrf

2023-11-21:03:40:06 --> Workflow started

2023-11-21:03:40:06 --> Start process_namelist()

2023-11-21:03:40:06 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_034006.925185

2023-11-21:03:40:06 --> Start run_ungrib()

2023-11-21:03:40:16 --> Start run_geogrid()

2023-11-21:03:40:35 --> Start run_metgrid()

2023-11-21:03:40:42 --> Start run_real()

2023-11-21:03:40:47 --> Start run_wrf()

-----------------------

Workflow Events Summary

-----------------------

2023-11-21:03:40:06 --> Workflow started

2023-11-21:03:40:06 --> Start process_namelist()

2023-11-21:03:40:06 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_034006.925185

2023-11-21:03:40:06 --> Start run_ungrib()

2023-11-21:03:40:16 --> Start run_geogrid()

2023-11-21:03:40:35 --> Start run_metgrid()

2023-11-21:03:40:42 --> Start run_real()

2023-11-21:03:40:47 --> Start run_wrf()

2023-11-21:03:43:07 --> Workflow completed...

We see the wrfout files in the run directory

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_034006.925185/wrf_rundir/WRF

.

.

.

ozone_plev.formatted wrfbdy_d01

p3_lookupTable_1.dat-2momI_v5.1.6_oldDimax wrf.exe

p3_lookupTable_1.dat-3momI_v5.1.6 wrffdda_d01

p3_lookupTable_1.dat-3momI_v5.1.6.gz wrfinput_d01

p3_lookupTable_2.dat-4.1 wrfout_d01_2023-11-20_00:00:00

README.namelist wrfout_d01_2023-11-20_03:00:00

README.physics_files wrfout_d01_2023-11-20_06:00:00

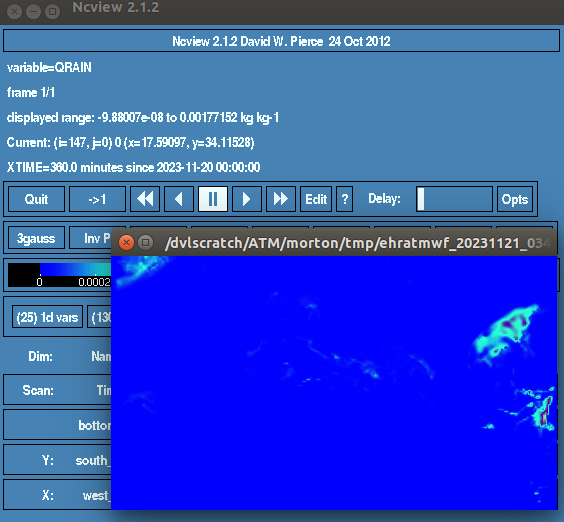

and can view them

$ ncview /dvlscratch/ATM/morton/tmp/ehratmwf_20231121_034006.925185/wrf_rundir/WRF/wrfout_d01_2023-11-20_06:00:00

Next, we’ll add the Flexpart WRF component. In this case we’ll run it in standalone mode first, using the wrfout files from the previous workflow as input. This is much like a number of scenarios where we might want to generate a set of WRF output files and then drive a collection of different Flexpart simulations with the same files. We use one workflow to generate the WRF output files, then a workflow for each of the Flexpart simulations that we want.

As in running WRF, we currently need to provide a correct flexp_input.txt.

flexp_input.txt.eu

Then, the wfnamelist contains entries for flexwrf and for the srm postprocessing, and the flexwrf group specifies the location of the wrfout files it needs, which are available from the previous workflow

wfnamelist.flexwrf

&control

workflow_list = 'flexwrf', 'srm'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2023112000'

end_time = '2023112006'

wrf_spinup_hours = 0

/

&flexwrf

wrfout_path = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231121_034006.925185/wrf_rundir/WRF'

wrfout_num_nests = 1

wrfout_hours_intvl = 3

bypass_user_input = '/dvlscratch/ATM/morton/NewUserHowTo/flexp_input.txt.eu'

/

&srm

levels_list = 1

multiplier = 1.0e-12

/

We run it

$ ehratmwf.py -n wfnamelist.flexwrf

2023-11-23:20:34:21 --> Workflow started

2023-11-23:20:34:21 --> Start process_namelist()

2023-11-23:20:34:21 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_203421.528151

2023-11-23:20:34:21 --> Start run_flexwrf()

2023-11-23:20:36:53 --> Start run_srm()

.

.

.

-----------------------

Workflow Events Summary

-----------------------

2023-11-23:20:34:21 --> Workflow started

2023-11-23:20:34:21 --> Start process_namelist()

2023-11-23:20:34:21 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_203421.528151

2023-11-23:20:34:21 --> Start run_flexwrf()

2023-11-23:20:36:53 --> Start run_srm()

2023-11-23:20:36:53 --> Workflow completed...

and find the expected Flexpart WRF binary output files and SRM files

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_203421.528151/flexwrf_rundir/flexwrf_rundir

AVAILABLE01 grid_conc_20231120030000_001

AVAILABLE2.sample grid_conc_20231120040000_001

AVAILABLE3.sample grid_conc_20231120050000_001

dates grid_conc_20231120060000_001

flexwrf.input header

flexwrf.input.backward1.sample IGBP_int1.dat

flexwrf.input.backward2.sample latlon_corner.txt

flexwrf.input.forward1.sample latlon.txt

flexwrf.input.forward2.sample OH_7lev_agl.dat

flexwrf_mpi README_FIRST.txt.sample

flexwrf_omp surfdata.t

flexwrf_serial surfdepo.t

grid_conc_20231120010000_001 wrfout01

grid_conc_20231120020000_001

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_203421.528151/srm_rundir

control_level_01

MyRelease.fp.20231120000000.l1.l0.dry.srm

MyRelease.fp.20231120000000.l1.l0.wet.srm

MyRelease.fp.20231120000000.l1.srm

Finally, for completeness, we demonstrate the creation and execution of a full WPS, WRF, Flexpart WRF workflow, using the same WRF and Flexpart namelists as above.

wfnamelist.wps+wrf+flexwrf

&control

workflow_list = 'ungrib', 'geogrid', 'metgrid', 'real', 'wrf', 'flexwrf', 'srm'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

!log_level = 'debug'

/

&time

start_time = '2023112000'

end_time = '2023112006'

wrf_spinup_hours = 0

/

&grib_input1

type = 'gfs_ctbto'

subtype = '0.5.fv3'

hours_intvl = 3

rootdir = '/ops/data/atm/live/ncep'

/

&domain_defn

domain_defn_path = 'eu_domain.nml'

/

&geogrid

num_mpi_tasks = 8

/

&metgrid

hours_intvl=3,

num_mpi_tasks = 16

/

&real

num_nests = 1

hours_intvl = 3

! This needs to be a full, absolute path

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/namelist.input.eu'

num_mpi_tasks = 16

/

&wrf

num_nests = 1

hours_intvl = 3

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/namelist.input.eu'

num_mpi_tasks = 16

/

&flexwrf

wrfout_num_nests = 1

wrfout_hours_intvl = 3

bypass_user_input = '/dvlscratch/ATM/morton/NewUserHowTo/flexp_input.txt.eu'

/

&srm

levels_list = 1

multiplier = 1.0e-12

/

Then, the execution of the workflow

$ ehratmwf.py -n wfnamelist.wps+wrf+flexwrf

2023-11-23:20:46:03 --> Workflow started

2023-11-23:20:46:03 --> Start process_namelist()

2023-11-23:20:46:03 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_204603.425803

2023-11-23:20:46:03 --> Start run_ungrib()

2023-11-23:20:46:12 --> Start run_geogrid()

2023-11-23:20:46:30 --> Start run_metgrid()

2023-11-23:20:46:37 --> Start run_real()

2023-11-23:20:46:41 --> Start run_wrf()

2023-11-23:20:48:11 --> Start run_flexwrf()

2023-11-23:20:50:44 --> Start run_srm()

.

.

.

-----------------------

Workflow Events Summary

-----------------------

2023-11-23:20:46:03 --> Workflow started

2023-11-23:20:46:03 --> Start process_namelist()

2023-11-23:20:46:03 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_204603.425803

2023-11-23:20:46:03 --> Start run_ungrib()

2023-11-23:20:46:12 --> Start run_geogrid()

2023-11-23:20:46:30 --> Start run_metgrid()

2023-11-23:20:46:37 --> Start run_real()

2023-11-23:20:46:41 --> Start run_wrf()

2023-11-23:20:48:11 --> Start run_flexwrf()

2023-11-23:20:50:44 --> Start run_srm()

2023-11-23:20:50:44 --> Workflow completed...

and a look at the top level of the workflow output directory

$ tree -L 1 /dvlscratch/ATM/morton/tmp/ehratmwf_20231123_204603.425803

/dvlscratch/ATM/morton/tmp/ehratmwf_20231123_204603.425803

├── flexwrf_rundir

├── geogrid_namelist.wps

├── geogrid_rundir

├── metgrid_rundir

├── namelist.wps

├── namelist.wps_gfs_ctbto

├── real_rundir

├── srm_rundir

├── tmpmetdata-gfs_ctbto

├── ungrib_rundir_gfs_ctbto

├── wrfout_d01

└── wrf_rundir

9 directories, 3 files

ERA-based Workflow Examples

ERA-based workflows are more complicated because

They use a nonstandard location for met files, yet the met files are expected to adhere to the same kind of directory hierarchy (YYYY/MM/DD/) as the GFS files

ERA met files come as surface and model-level files, so both sets need to be ungribbed

Ungribbing these files requires custom Vtables to be specified

Once ungribbed, an additional step needs to be made to create pressure level ungribbed files, using the util/calc_ecmwf_p.exe executable that intermittently fails

Metgrid specifications require that we provide paths to all of the ungribbed files

We start with the ungribbing, as that’s where most of the work is done

wfnamelist.ecmwfungrib

&control

workflow_list = 'ungrib'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

!log_level='DEBUG'

/

&time

start_time = '2014012400'

end_time = '2014012406'

wrf_spinup_hours = 0

/

&grib_input1

type = 'ecmwf_ml'

hours_intvl = 3

rootdir = '/dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/metdata/era'

custom_vtable_path = '/dvlscratch/ATM/morton/git/high-res-atm/packages/nwpservice/tests/testcase_data/ecmwf_anne_2014_eu/WPS/Vtable.ECMWF.ml'

/

&grib_input2

type = 'ecmwf_sfc'

hours_intvl = 3

rootdir = '/dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/metdata/era'

custom_vtable_path = '/dvlscratch/ATM/morton/git/high-res-atm/packages/nwpservice/tests/testcase_data/ecmwf_anne_2014_eu/WPS/Vtable.ECMWF.sfc'

/

Users will need to pay attention to the actual location of the GRIB inputs and the custom Vtables. It is up to the user to arrange the ERA files in the way that we have agreed upon.

The files need to be stored in a datetime-oriented hierarchy much like the CTBTO GFS files, for example era/2014/01/24/. We have imposed the requirement that the files are named according to the convention

EAYYYYMMDDHH.[ml|sfc]

So, in this case the file storage looks like

$ tree /dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/metdata/era

/dvlscratch/ATM/morton/git/high-res-atm/packages/ehratmworkflow/tests/metdata/era

└── 2014

    └── 01

        └── 24

            ├── EA2014012400.ml

            ├── EA2014012400.sfc

            ├── EA2014012403.ml

            ├── EA2014012403.sfc

            ├── EA2014012406.ml

            └── EA2014012406.sfc

3 directories, 6 files
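
When arranging ERA files by hand it is easy to get a directory level or a file name slightly wrong. As an illustration of the layout described above, the sketch below builds the expected path for a given analysis time under the YYYY/MM/DD hierarchy and the EAYYYYMMDDHH.[ml|sfc] naming convention. The function era_file_path is hypothetical and is not part of the workflow code.

```python
import os
from datetime import datetime


def era_file_path(rootdir, when, level_type):
    """Build <rootdir>/YYYY/MM/DD/EAYYYYMMDDHH.<ml|sfc> for one analysis time."""
    if level_type not in ("ml", "sfc"):
        raise ValueError("level_type must be 'ml' or 'sfc'")
    return os.path.join(
        rootdir,
        f"{when:%Y}", f"{when:%m}", f"{when:%d}",
        f"EA{when:%Y%m%d%H}.{level_type}",
    )


# Relative root used here only to keep the example short
path = era_file_path("era", datetime(2014, 1, 24, 3), "ml")
print(path)
```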

Although the above repository may be in place on devlan for some time, new users should make sure that the paths in the wfnamelist are still valid.

Then we run

$ ehratmwf.py -n wfnamelist.ecmwfungrib

2023-11-24:02:15:28 --> Workflow started

2023-11-24:02:15:28 --> Start process_namelist()

2023-11-24:02:15:28 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569

2023-11-24:02:15:28 --> Start run_ungrib()

2023-11-24:02:15:30 --> Start run_ecmwfplevels()

-----------------------

Workflow Events Summary

-----------------------

2023-11-24:02:15:28 --> Workflow started

2023-11-24:02:15:28 --> Start process_namelist()

2023-11-24:02:15:28 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569

2023-11-24:02:15:28 --> Start run_ungrib()

2023-11-24:02:15:30 --> Start run_ecmwfplevels()

2023-11-24:02:15:31 --> Workflow completed...

There is a lot that happens here. The ungrib component is invoked twice, once for model levels and once for surface levels. Then, the calc_ecmwf_p.exe is invoked, using both sets of files as input to produce an additional set of pressure level files, all of which are used by the metgrid component.

So, there are several run directories, each of which is self-contained and can be experimented with individually

$ ls -F /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569

ecmwfplevels_namelist.wps namelist.wps_ecmwf_sfc ungrib_rundir_ecmwf_ml/

ecmwfplevels_rundir/ tmpmetdata-ecmwf_ml/ ungrib_rundir_ecmwf_sfc/

namelist.wps_ecmwf_ml tmpmetdata-ecmwf_sfc/

Within the ecmwfplevels_rundir/ we have ungribbed model level files (ECMWF_ML:), surface files (ECMWF_SFC:) and pressure level files (PRES:).

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569/ecmwfplevels_rundir/

ecmwf_coeffs geogrid namelist.wps.all_options README

ECMWF_ML:2014-01-24_00 geogrid.exe namelist.wps.fire ungrib

ECMWF_ML:2014-01-24_03 link_grib.csh namelist.wps.global ungrib.exe

ECMWF_ML:2014-01-24_06 logfile.log namelist.wps.nmm util

ECMWF_SFC:2014-01-24_00 metgrid PRES:2014-01-24_00

ECMWF_SFC:2014-01-24_03 metgrid.exe PRES:2014-01-24_03

ECMWF_SFC:2014-01-24_06 namelist.wps PRES:2014-01-24_06

WARNING - there is a bug in util/calc_ecmwf_p.exe that, on devlan, intermittently seg faults. Others have reported this to occur on other platforms. I estimate that this happens approximately 10-20% of the time in a somewhat random fashion. Therefore, it’s important to be aware of this and be able to adjust accordingly.

I recommend that users consider running ERA ungrib in standalone mode (as we just did, above) and ensure that it was successful. Then, as in the next example, run geogrid and metgrid, pointing to the successfully created ungribbed files, producing the met_em* files needed by the WRF model.
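
One simple way to carry out the check recommended above is to confirm that every expected ungribbed file is present and non-empty before moving on to metgrid. The sketch below is only an illustration: it assumes that a failed calc_ecmwf_p.exe run shows up as missing or empty PRES: files, which we have not verified, and missing_ungrib_files is a hypothetical helper. The demonstration uses a scratch directory with placeholder files rather than real output.

```python
import os
import tempfile
from datetime import datetime, timedelta

PREFIXES = ("ECMWF_ML", "ECMWF_SFC", "PRES")


def missing_ungrib_files(rundir, start_time, end_time, hours_intvl):
    """Return expected PREFIX:YYYY-MM-DD_HH files that are absent or empty in rundir."""
    fmt = "%Y%m%d%H"
    current = datetime.strptime(start_time, fmt)
    end = datetime.strptime(end_time, fmt)
    missing = []
    while current <= end:
        for prefix in PREFIXES:
            name = f"{prefix}:{current:%Y-%m-%d_%H}"
            full = os.path.join(rundir, name)
            if not os.path.isfile(full) or os.path.getsize(full) == 0:
                missing.append(name)
        current += timedelta(hours=hours_intvl)
    return missing


# Demonstration in a scratch directory: create every expected file except one
# PRES file, mimicking a run in which the pressure-level step failed part-way.
with tempfile.TemporaryDirectory() as rundir:
    for hour in ("00", "03", "06"):
        for prefix in PREFIXES:
            if (prefix, hour) == ("PRES", "06"):
                continue
            with open(os.path.join(rundir, f"{prefix}:2014-01-24_{hour}"), "w") as f:
                f.write("placeholder")
    missing = missing_ungrib_files(rundir, "2014012400", "2014012406", 3)

print(missing)
```

An empty list would suggest that the ungrib step completed and that its run directory can safely be used as input to metgrid.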

The domain we use is defined in the domain definition namelist

small_domain_twonest.nml

&domain_defn

parent_id = 1, 1,

parent_grid_ratio = 1, 3,

i_parent_start = 1, 20,

j_parent_start = 1, 20,

e_we = 51, 19,

e_sn = 42, 16,

geog_data_res = '10m', '5m'

dx = 30000,

dy = 30000,

map_proj = 'lambert',

ref_lat = 50.00,

ref_lon = 5.00,

truelat1 = 60.0,

truelat2 = 30.0,

stand_lon = 5.0,

/
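
Two properties of this two-nest definition are worth checking when you adapt it. First, the grid spacing of a nest is its parent's spacing divided by parent_grid_ratio. Second, WPS requires that for each nest, e_we and e_sn be one more than a multiple of parent_grid_ratio. The sketch below applies both rules to the values above. The helper names are our own, and the code assumes that parent_id uses 1-based domain numbers, as in the namelist.

```python
def nest_grid_spacing(dx, parent_id, parent_grid_ratio):
    """Return the grid spacing in metres of each domain, outermost domain first."""
    spacing = []
    for i, (pid, ratio) in enumerate(zip(parent_id, parent_grid_ratio)):
        if i == 0:
            spacing.append(float(dx))  # the outermost domain uses dx directly
        else:
            spacing.append(spacing[pid - 1] / ratio)  # parent_id is 1-based
    return spacing


def nest_dimensions_ok(e_we, e_sn, parent_grid_ratio):
    """For each nest, check that (e_we - 1) and (e_sn - 1) are multiples of the ratio."""
    return [
        (we - 1) % ratio == 0 and (sn - 1) % ratio == 0
        for we, sn, ratio in zip(e_we[1:], e_sn[1:], parent_grid_ratio[1:])
    ]


# Values taken from small_domain_twonest.nml
spacing = nest_grid_spacing(30000, parent_id=[1, 1], parent_grid_ratio=[1, 3])
dims_ok = nest_dimensions_ok(e_we=[51, 19], e_sn=[42, 16], parent_grid_ratio=[1, 3])
print(spacing, dims_ok)
```

Here the second nest runs at 10 km, and both of its dimensions satisfy the rule (18 and 15 are multiples of 3).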

Then, the workflow namelist for the combined geogrid and metgrid

wfnamelist.geogrid+metgrid

&control

workflow_list = 'geogrid', 'metgrid'

workflow_rootdir = '/dvlscratch/ATM/morton/tmp'

/

&time

start_time = '2014012400'

end_time = '2014012406'

wrf_spinup_hours = 0

/

&domain_defn

domain_defn_path = 'small_domain_twonest.nml'

/

&geogrid

num_mpi_tasks = 4

/

&metgrid

ug_prefix_01 = 'ECMWF_ML'

ug_path_01 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569/ecmwfplevels_rundir/WPS'

ug_prefix_02 = 'ECMWF_SFC'

ug_path_02 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569/ecmwfplevels_rundir/WPS'

ug_prefix_03 = 'PRES'

ug_path_03 = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231124_021528.378569/ecmwfplevels_rundir/WPS'

num_nests = 2

hours_intvl = 3

num_mpi_tasks = 4

/

Then, running it

$ ehratmwf.py -n wfnamelist.geogrid+metgrid

2023-11-24:02:54:50 --> Workflow started

2023-11-24:02:54:50 --> Start process_namelist()

2023-11-24:02:54:50 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_025450.817166

2023-11-24:02:54:50 --> Start run_geogrid()

2023-11-24:02:54:59 --> Start run_metgrid()

-----------------------

Workflow Events Summary

-----------------------

2023-11-24:02:54:50 --> Workflow started

2023-11-24:02:54:50 --> Start process_namelist()

2023-11-24:02:54:50 --> Started run_workflow(): /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_025450.817166

2023-11-24:02:54:50 --> Start run_geogrid()

2023-11-24:02:54:59 --> Start run_metgrid()

2023-11-24:02:55:02 --> Workflow completed...

When we look at the metgrid run directory, we see a fully functional standalone directory in which we could experiment with rerunning metgrid. All of the ungribbed and geogrid data (both nests) is available, as well as the met_em* output files (both nests)

$ ls /dvlscratch/ATM/morton/tmp/ehratmwf_20231124_025450.817166/metgrid_rundir/WPS

ECMWF_ML:2014-01-24_00 metgrid.exe

ECMWF_ML:2014-01-24_03 metgrid.log.0000

ECMWF_ML:2014-01-24_06 metgrid.log.0001

ECMWF_SFC:2014-01-24_00 metgrid.log.0002

ECMWF_SFC:2014-01-24_03 metgrid.log.0003

ECMWF_SFC:2014-01-24_06 namelist.wps

geo_em.d01.nc namelist.wps.all_options

geo_em.d02.nc namelist.wps.fire

geogrid namelist.wps.global

geogrid.exe namelist.wps.nmm

link_grib.csh PRES:2014-01-24_00

met_em.d01.2014-01-24_00:00:00.nc PRES:2014-01-24_03

met_em.d01.2014-01-24_03:00:00.nc PRES:2014-01-24_06

met_em.d01.2014-01-24_06:00:00.nc README

met_em.d02.2014-01-24_00:00:00.nc ungrib

met_em.d02.2014-01-24_03:00:00.nc ungrib.exe

met_em.d02.2014-01-24_06:00:00.nc util

metgrid

Of course, we could have created a wfnamelist that also ran real, wrf and flexwrf, and then we’d be done. But, consider a scenario where we might want to run multiple variations of WRF with different parameterizations, and then drive Flexpart WRF runs from each of those. In this scenario we would have already created the necessary met_em* files for ALL of the runs, and so we could simply create a wfnamelist file for each of the WRF/Flexpart WRF variations, as we demonstrate below (only one case)

Note that at this point, once the met_em* files have been created, there is nothing that’s specific to ECMWF or GFS (or other met inputs) any more (though things like num_metgrid_levels in namelist.input, etc. will be different). So, we specify the run directory created in the previous step as the source of our met_em* files

WARNING - this is a test example with a very small Nest 2, and trying to use multiple MPI tasks on it would likely fail.

wfnamelist.real+wrf+flexwrf

&control

workflow_list = 'real', 'wrf', 'flexwrf', 'srm'

workflow_rootdir = '/dvlscratch/ATM/morton'

!log_level = 'debug'

/

&domain_defn

domain_defn_path = 'small_domain_twonest.nml'

/

&time

start_time = '2014012400'

end_time = '2014012406'

wrf_spinup_hours = 0

/

&real

metgrid_path = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231124_025450.817166/metgrid_rundir/WPS'

num_nests = 2

hours_intvl = 3

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/ERA/namelist.input.wrf'

/

&wrf

num_nests = 2

hours_intvl = 3

anne_bypass_namelist_input = '/dvlscratch/ATM/morton/NewUserHowTo/ERA/namelist.input.wrf'

/

&flexwrf

wrfout_num_nests = 2

wrfout_hours_intvl = 3

bypass_user_input = '/dvlscratch/ATM/morton/NewUserHowTo/ERA/flxp_input_binaryout.txt'

/

&srm

levels_list = 1

multiplier = 1.0e-12

/

Then, we run it

$ ehratmwf.py -n wfnamelist.real+wrf+flexwrf

2023-11-24:03:28:50 --> Workflow started

2023-11-24:03:28:50 --> Start process_namelist()

2023-11-24:03:28:50 --> Started run_workflow(): /dvlscratch/ATM/morton/ehratmwf_20231124_032850.137079

2023-11-24:03:28:50 --> Start run_real()

2023-11-24:03:28:52 --> Start run_wrf()

2023-11-24:03:30:51 --> Start run_flexwrf()

2023-11-24:03:34:15 --> Start run_srm()

.

.

.

-----------------------

Workflow Events Summary

-----------------------

2023-11-24:03:28:50 --> Workflow started

2023-11-24:03:28:50 --> Start process_namelist()

2023-11-24:03:28:50 --> Started run_workflow(): /dvlscratch/ATM/morton/ehratmwf_20231124_032850.137079

2023-11-24:03:28:50 --> Start run_real()

2023-11-24:03:28:52 --> Start run_wrf()

2023-11-24:03:30:51 --> Start run_flexwrf()

2023-11-24:03:34:15 --> Start run_srm()

2023-11-24:03:34:15 --> Workflow completed...

and view the workflow directory

$ tree -L 1 -F /dvlscratch/ATM/morton/ehratmwf_20231124_032850.137079

/dvlscratch/ATM/morton/ehratmwf_20231124_032850.137079

├── flexwrf_rundir/

├── real_rundir/

├── srm_rundir/

├── wrfout_d01/

├── wrfout_d02/

└── wrf_rundir/

6 directories, 0 files

and the SRM file (which still has the bug that tries to print the release location in map coordinates rather than latitude/longitude)

$ cat /dvlscratch/ATM/morton/ehratmwf_20231124_032850.137079/srm_rundir/NONE.fp.20140124000000.l1.srm

******* ****** 20140124 06 20140124 00 0.1000000E+16 6 1 1 0.37 0.22 "NONE"

50.06 3.45 1 0.8408114E+03

50.06 3.82 1 0.4162801E+03

50.06 3.45 2 0.9604443E+03

.

.

.

49.61 6.02 6 0.1827324E-01

48.71 6.39 6 0.5225454E-02

48.94 6.39 6 0.5298369E-01

49.16 6.39 6 0.2253707E-01

49.39 6.39 6 0.2027596E-03

This is one successful output. If we wanted a physics-varying ensemble of WRF and Flexpart WRF runs, all based on the same set of inputs, we could simply run this wfnamelist multiple times with different namelist.input files for each case.
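
As a rough sketch of how such an ensemble might be prepared, the following generates one wfnamelist per WRF namelist.input by substituting the path into a template based on the wfnamelist above. The variant labels and the /path/to/... file names are hypothetical placeholders, not files that exist on devlan, and write_ensemble_namelists is our own illustrative helper.

```python
import os
import tempfile

TEMPLATE = """\
&control
workflow_list = 'real', 'wrf', 'flexwrf', 'srm'
workflow_rootdir = '/dvlscratch/ATM/morton'
/
&domain_defn
domain_defn_path = 'small_domain_twonest.nml'
/
&time
start_time = '2014012400'
end_time = '2014012406'
wrf_spinup_hours = 0
/
&real
metgrid_path = '/dvlscratch/ATM/morton/tmp/ehratmwf_20231124_025450.817166/metgrid_rundir/WPS'
num_nests = 2
hours_intvl = 3
anne_bypass_namelist_input = '{namelist_input}'
/
&wrf
num_nests = 2
hours_intvl = 3
anne_bypass_namelist_input = '{namelist_input}'
/
&flexwrf
wrfout_num_nests = 2
wrfout_hours_intvl = 3
bypass_user_input = '/dvlscratch/ATM/morton/NewUserHowTo/ERA/flxp_input_binaryout.txt'
/
&srm
levels_list = 1
multiplier = 1.0e-12
/
"""


def write_ensemble_namelists(outdir, namelist_inputs):
    """Write one wfnamelist per WRF namelist.input and return the paths written."""
    written = []
    for label, namelist_input in namelist_inputs.items():
        path = os.path.join(outdir, f"wfnamelist.real+wrf+flexwrf.{label}")
        with open(path, "w") as f:
            f.write(TEMPLATE.format(namelist_input=namelist_input))
        written.append(path)
    return written


# Hypothetical physics variants; these namelist.input files are NOT from the
# original document and would have to be prepared by the user.
variants = {
    "physA": "/path/to/namelist.input.physA",
    "physB": "/path/to/namelist.input.physB",
}

with tempfile.TemporaryDirectory() as outdir:
    paths = write_ensemble_namelists(outdir, variants)
    with open(paths[0]) as f:
        first = f.read()

print([os.path.basename(p) for p in paths])
```

Each generated file could then be run in turn with ehratmwf.py -n, and each run would produce its own timestamped workflow directory.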